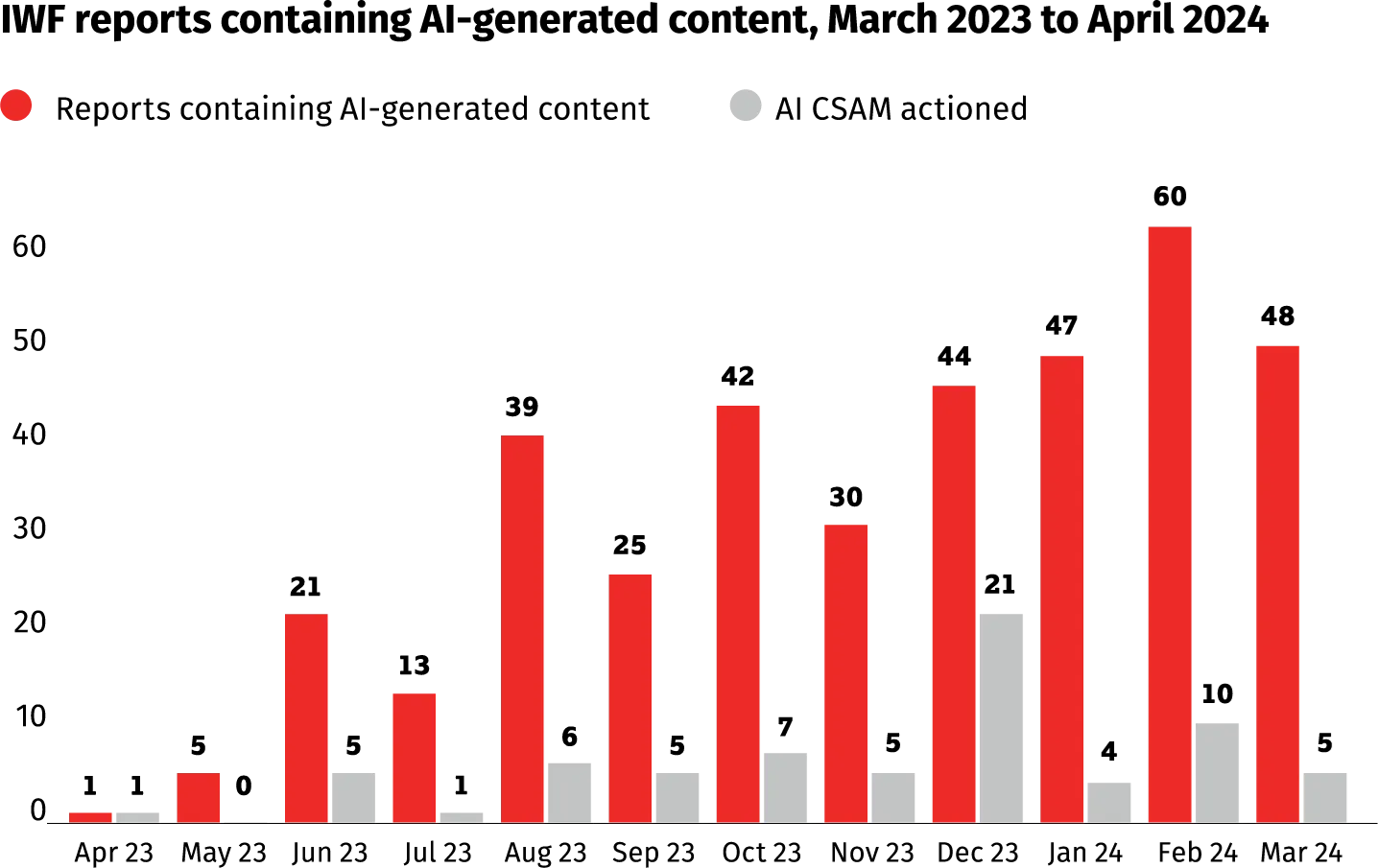

A new report from the Internet Watch Foundation (IWF) has revealed a disturbing trend in the online world of child exploitation. The UK-based organization, which monitors and combats online child sexual abuse, has found a significant rise in AI-generated content depicting the sexual exploitation of minors.

This isn’t just about still images anymore. The IWF’s latest findings, highlighted by NBC News, show that offenders are now using AI to create shockingly realistic “deepfake” videos. These videos often superimpose a child’s face onto existing adult content or, even more disturbingly, onto real footage of child abuse.

Let’s be clear: this is a major problem that’s evolving faster than many of us realized. In a 30-day review this spring, the IWF found 3,512 AI-generated child sexual abuse material (CSAM) images and videos on a single dark web forum. That’s a 17% increase from a similar review conducted just six months earlier. Even more concerning, the content is becoming more extreme, with a higher percentage depicting explicit or severe acts.

Dan Sexton, the IWF’s chief technology officer, warns that “Realism is improving. Severity is improving. It’s a trend that we wouldn’t want to see.”

While fully synthetic videos still look unrealistic, the technology is advancing rapidly. Currently, predators often use existing CSAM footage to train specialized AI algorithms, creating custom deepfakes from just a few images or short video clips. This practice causes ongoing harm to survivors, as footage of their abuse is given new life in these AI-generated scenarios.

“Some of these are victims that were abused decades ago. They’re grown-up survivors now,” Sexton noted, highlighting the long-lasting impact of this technology.

The rise of AI-generated CSAM poses significant challenges for law enforcement and tech companies. While major AI firms have pledged to follow ethical guidelines, countless smaller, free-to-use AI programs are readily available online. These tools are often behind the content found by the IWF.

This trend could make it harder to track offenders. Social media platforms and law enforcement often rely on scanning new images against databases of known CSAM. However, deepfaked content may slip past these safeguards.

Legal experts are grappling with how to address this issue. While possessing CSAM imagery, AI-generated or not, is clearly illegal in the US, prosecuting those who create it using AI may prove more complex. Paul Bleakley, an assistant professor of criminal justice at the University of New Haven, points out that harsher penalties for CSAM creators might be harder to apply in AI-generated cases.

With companies even pushing for AI to take over social media, it’s clear that we’re well past the introductory stage of truly smart AI on the web. Fortunately, governments and law enforcement aren’t letting concerning AI platforms and tools thrive as they exploit users. Last week, we highlighted a development in which the FTC forced the developers of an app that let users “vet” their dates for STIs to shut it down over privacy concerns and data accuracy issues.

So it’s clear that the fight against AI-generated CSAM will require a coordinated effort from governments, tech companies, and society as a whole.
