Yes Apple, this transcription is very useful. 🤭 pic.twitter.com/vC9vEGnk7L

— Varun Mirchandani (@beingmirchi) June 12, 2024

Apple has finally embraced artificial intelligence (AI) in a big way with the announcement of “Apple Intelligence” at WWDC 2024. This new suite of AI features aims to bring more conversational and capable AI assistants, AI-generated content creation tools, and enhanced privacy to iPhones, Macs, and iPads. But how does it stack up against Google’s existing AI offerings on Android devices? Let’s take a deep dive into the key Apple Intelligence features and compare them to Google’s AI capabilities.

Siri gets a major AI upgrade

One of the centerpieces of Apple Intelligence is a revamped Siri with significant AI enhancements. Apple claims that the new Siri will be more conversational, thanks to improved natural language understanding capabilities. This means users can speak to Siri more naturally, without having to worry about stumbling over words or phrasing their requests perfectly.

Google Assistant, on the other hand, has been capable of understanding natural language for several years now. The company has continuously improved its language understanding models, allowing users to converse with the Assistant in a more natural and conversational manner.

Apple is also introducing “Personal Context” for Siri, which will allow the assistant to draw information from users’ photos, calendar events, messages, and other apps to provide more personalized and contextual responses. For instance, if a user asks about their mom’s flight details, Siri will cross-reference flight information from emails to provide an up-to-date arrival time.

Google Assistant has had similar capabilities for a while, with the ability to pull information from users’ calendars, emails, and other data sources to provide relevant and personalized responses. However, neither Google Assistant nor Gemini can currently pull off tricks like the ones Apple demoed at its event. So, as things stand, Siri seems to be pulling ahead of Google Assistant and Gemini when it comes to personal context. The Verge’s video rounding up the biggest announcements from WWDC 2024 highlights all of these demos.

Typing to Siri is another new feature that Apple is introducing, allowing users to interact with the assistant through text input instead of voice commands. This could be useful in situations where voice input is not convenient or appropriate.

Google Assistant has supported text input for years, offering users the flexibility to type or speak their commands and queries.

But the most impressive and useful new feature, in my humble opinion, is Siri’s “onscreen awareness”: Siri can understand what is on your screen and take actions based on it. Imagine you’re texting with a friend who has just moved and sends you their new address. With Siri powered by Apple Intelligence on your iPhone, you can skip the hassle of entering it manually. Simply say something like “Add this address to [friend’s name]’s contact card” or “Let me save this info for [friend’s name].” Siri can then recognize the address and offer to update your friend’s contact information, saving you time and effort.

AI writing and image generation tools

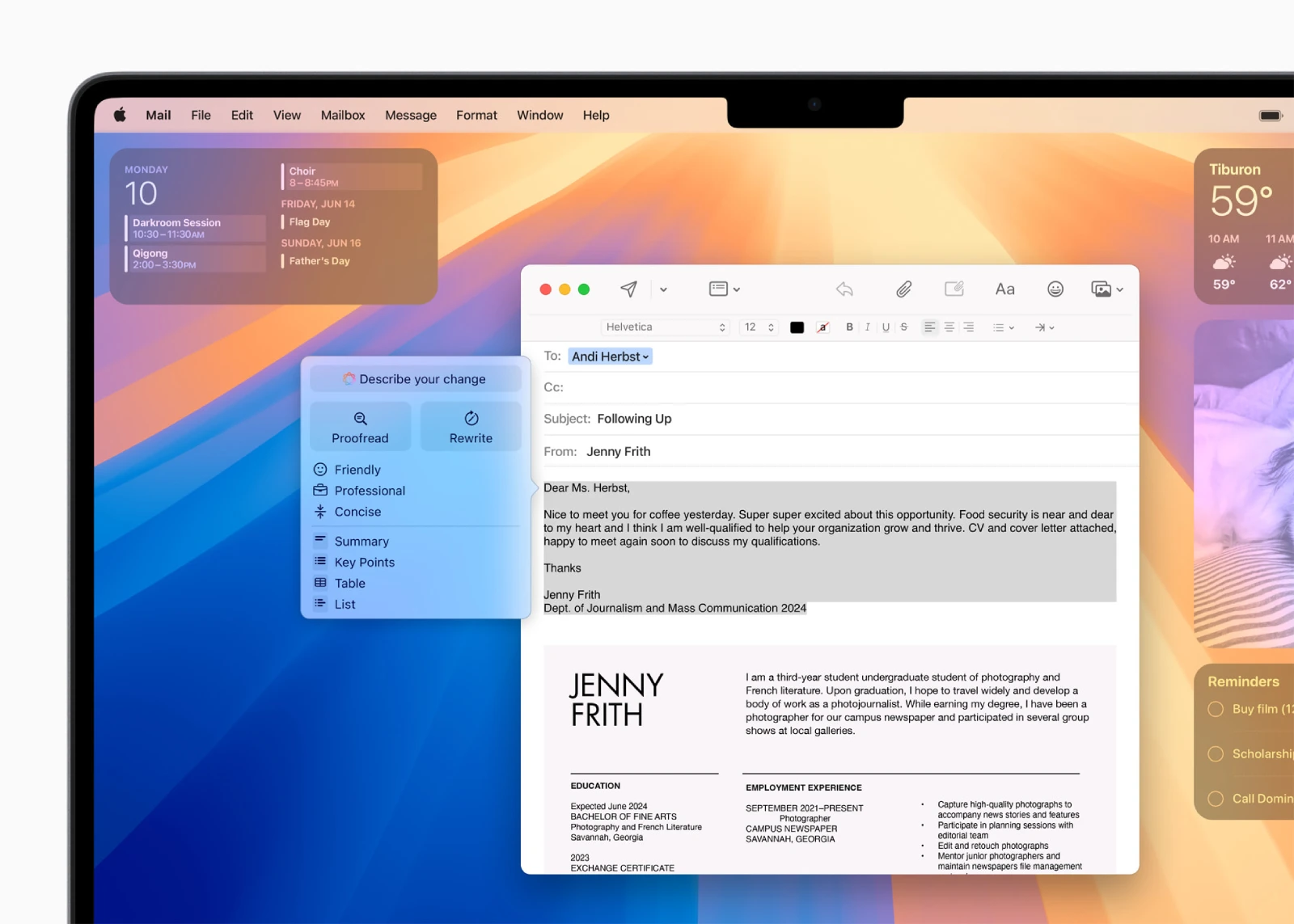

Apple Intelligence will bring a range of AI-powered writing and image generation tools to Apple devices. The Writing Tools feature will allow users to change the tone of their writing (e.g., friendly, professional, concise) and generate summaries or key points from longer text.

Google has offered similar AI writing assistance through its Smart Compose and Smart Reply features in Gmail and other apps. These tools suggest complete sentences or replies based on the context of the conversation or email.

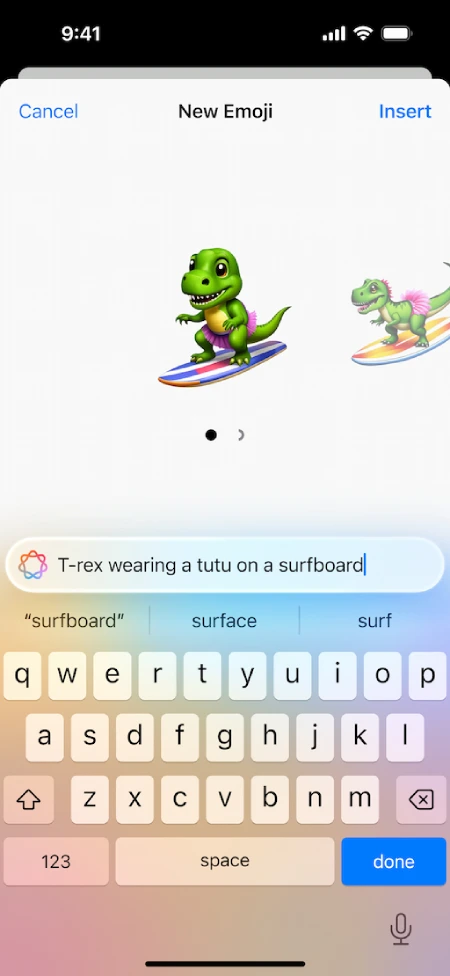

For image generation, Apple is introducing “Genmoji,” which allows users to create custom emoji-like reactions by typing a description of the desired image. The “Image Playground” feature lets users generate images from text prompts and apply various styles to the generated images.

Google has had similar text-to-image generation capabilities through the Gemini app, the AI Test Kitchen app, and text-to-image models like Imagen and Parti. However, these tools are not yet integrated into Android’s core experiences as deeply as Image Playground and Genmoji are integrated into Apple’s.

Notifications with summarization

iOS 18 utilizes AI to analyze notifications and prioritize them based on user behavior and preferences. It can also group and summarize notifications, providing a concise overview and reducing notification clutter.

Android offers notification prioritization based on user interaction and app settings. However, the level of AI-powered summarization and grouping seen in iOS 18 isn’t currently available.

The enhanced notification system in iOS 18 aims to reduce the cognitive load on users by intelligently managing and summarizing notifications. While Android does offer some prioritization capabilities, Apple’s use of AI to summarize and present notifications contextually sets a new standard for user experience.

Integration with ChatGPT (GPT-4o)

One of the most significant announcements from Apple is the integration of OpenAI’s ChatGPT (powered by GPT-4o) into Apple Intelligence. When Siri cannot handle a user’s request, it will prompt the user to use ChatGPT, sending the query, documents, or photos to OpenAI’s servers for processing. This integration will give Apple users access to the powerful language model behind ChatGPT, enabling more advanced question answering, writing assistance, and even image generation.

Google gives Android users most of the same capabilities through the Gemini app, and this is precisely where Google shines: users don’t need the latest or greatest Android phone to take advantage of Gemini’s powerful AI capabilities. By contrast, Apple Intelligence is limited to the iPhone 15 Pro models and to iPads and Macs with M1 or later chips, and only when Siri and the device language are set to U.S. English.

Of course, Apple might expand the availability of Apple Intelligence in other languages later down the line, but it’s unlikely that we’ll see it land on older iPhones.

Privacy and on-device processing

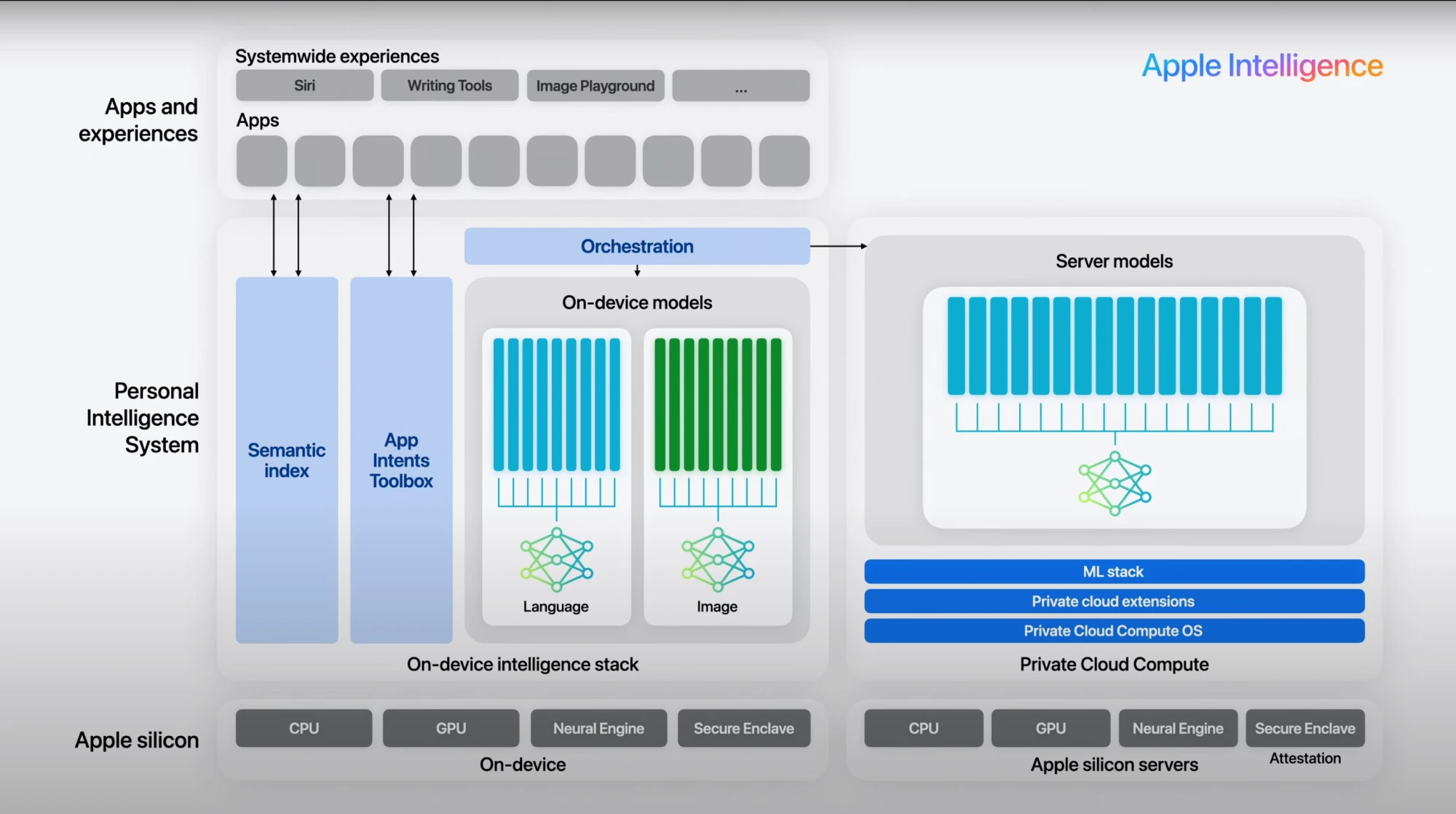

Apple is heavily emphasizing privacy as a key differentiator for its AI offerings. The company claims that many Apple Intelligence features will run on-device, without sending data to the cloud. When cloud processing is required, Apple says it will use a “Private Cloud Compute” system, where the models run on Apple Silicon servers, and the company will never store or access user data.

Additionally, Apple plans to allow independent experts to inspect the code running on its servers to verify privacy claims. This includes making virtual images of the Private Cloud Compute builds publicly available for inspection by security researchers.

Google, on the other hand, has faced scrutiny and criticism over its data collection and privacy practices. While the company has made efforts to improve privacy and offer on-device AI processing in some cases, it heavily relies on cloud-based models and data processing for many of its AI services. Android users may have concerns about the privacy implications of using Google’s AI offerings, especially when dealing with sensitive information or personal data.

But things are changing quickly for Google, too. The company recently rolled out Gemini Nano support for the Pixel 8 and Pixel 8a with the June Feature Drop. Furthermore, earlier this year CNBC reported that we can expect to see more advanced Gemini models on Android phones in 2025. This development could bring a host of on-device capabilities that can give Apple Intelligence a run for its money.

Availability and compatibility

As mentioned above, Apple Intelligence features will initially be available only on the iPhone 15 Pro and 15 Pro Max models, as well as iPads and Macs equipped with M1 or later chips. Additionally, at launch the features will be limited to devices with Siri and the device language set to U.S. English.

Apple is apparently unable to bring Apple Intelligence to older iPhone models because it relies on an on-device small language model (SLM) with about 3 billion parameters, which requires around 6 GB of RAM. Since iOS itself uses about 1 GB of RAM, a device with 6 GB of RAM would have only about 5 GB left for the model, which is not enough. Apple does use quantization and other efficiency techniques to keep the model’s memory footprint down, but the requirements still exceed what iPhones with 6 GB of RAM can provide, which is why those models are not supported. The supported iPhone 15 Pro and 15 Pro Max, by comparison, have 8 GB of RAM. For those interested, you can check out all the technical details in Apple’s latest Platforms State of the Union video.
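The RAM argument boils down to simple arithmetic. The Python sketch below reproduces it under stated assumptions. The roughly 3 billion parameters and the roughly 1 GB iOS overhead come from the paragraph above. Storing every weight at 16 bits is an assumption: it is the bit width that yields the ~6 GB figure, not a number Apple has confirmed for its model. The sketch counts only the weights and ignores activations, caches, and other running apps, so treat it as a rough illustration rather than Apple’s actual memory budget. The helper names are hypothetical.

```python
# Rough memory estimate for an on-device language model.
# Assumptions (NOT Apple's published figures): every weight is stored at a
# uniform bits-per-weight, and iOS takes a flat ~1 GB, as the article states.
# Only the weights are counted; activations, caches and other apps are ignored.

GB = 1e9  # decimal gigabytes keep the arithmetic simple


def model_footprint_gb(params: float, bits_per_weight: float) -> float:
    """Memory needed for the model weights alone, in decimal GB."""
    return params * bits_per_weight / 8 / GB


def fits_in_ram(device_ram_gb: float, params: float, bits_per_weight: float,
                os_overhead_gb: float = 1.0) -> bool:
    """True if the weights plus the OS overhead fit in the device's RAM."""
    needed = model_footprint_gb(params, bits_per_weight) + os_overhead_gb
    return needed <= device_ram_gb


PARAMS = 3e9  # ~3 billion parameters, per the article

if __name__ == "__main__":
    # 16 bits per weight is the assumption that gives the ~6 GB model size.
    for name, ram_gb in [("6 GB iPhone", 6.0), ("8 GB iPhone 15 Pro", 8.0)]:
        print(name, fits_in_ram(ram_gb, PARAMS, bits_per_weight=16))
    # prints:
    # 6 GB iPhone False
    # 8 GB iPhone 15 Pro True
```

Under these assumptions, the model plus iOS needs about 7 GB, which rules out 6 GB devices but fits in 8 GB. Passing a smaller `bits_per_weight`, which is what quantization does, shrinks the weight footprint proportionally.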

This decision is already drawing some criticism from Apple users, but we’ll have to wait and see how big the backlash gets once the stable version of iOS 18 goes live.

Google’s AI services, on the other hand, are generally available across a wide range of Android devices, with support for multiple languages and regions. However, Google’s on-device AI is only available on its latest Pixel 8 series and a few other select flagship Android phones. So both Google and Apple seem to be betting on AI to force users to upgrade to their newer models.

Performance and user experience

While Apple has provided details on the capabilities of Apple Intelligence, it remains to be seen how well these features will perform in real-world usage. User experience is a crucial factor, and Apple’s tight integration of hardware and software could give it an advantage in delivering a seamless and responsive AI experience.

Google, with its extensive experience in AI and machine learning, has generally been praised for the performance and accuracy of its AI services. However, the user experience can sometimes feel disjointed or inconsistent across different Android devices and software versions. For instance, Google’s much-hyped Video Boost feature was taking hours to process videos when it went live.

Ecosystem lock-in

One potential concern with Apple Intelligence is the risk of ecosystem lock-in. As Apple deepens the integration of AI into its devices and services, it may become increasingly difficult for users to switch to other platforms without sacrificing these advanced AI capabilities.

Google, on the other hand, offers many of its AI services across various platforms, including iOS and the web, allowing users to access these capabilities regardless of their device or operating system choice.

Other tricks up Google’s sleeve

Google has been in the AI game for a long time. So while Apple’s new AI features might seem impressive and, in some cases, even better than Google’s offerings, remember that Google has a lot more on the table. I’ll quickly run through some of the AI-powered features you won’t find on iPhones, at least not anytime soon.

Pixel phones lean heavily on AI for photos and videos: Night Sight and Real Tone help when capturing, Magic Eraser helps when editing, and Video Boost on the Pixel 8 Pro enhances video quality. Pixel also uses AI to help you communicate. Magic Compose tailors the style of your messages, Live Translate breaks down language barriers, and Gemini Nano on the Pixel 8 series lets you generate text messages even offline.

Need a smarter assistant? Hold for Me waits on hold for you so you don’t have to sit through the hold music, Call Screen identifies spam calls, and Extreme Battery Saver prioritizes your most important tasks. And that’s not all! Circle to Search lets you search for anything on your screen, from any app, by simply circling it.

These are just some of the AI features available on Google Pixel phones. So Apple Intelligence still has a lot of catching up to do.

Conclusion

Apple’s introduction of Apple Intelligence represents a significant step forward in bringing advanced AI capabilities to its devices and services. The company has prioritized natural language understanding, personalization, privacy, and tight hardware-software integration as key differentiators. While Google has been offering many similar AI features on Android for some time, Apple’s approach emphasizes on-device processing, strict privacy controls, and a secure cloud infrastructure for more advanced AI tasks. This could appeal to users concerned about data privacy and security.

However, Google’s AI services generally have broader availability across devices and platforms, and the company’s vast experience in AI and machine learning could give it an edge in terms of performance and accuracy. Speaking of which, during my research into Apple’s new AI, I stumbled upon a hilarious post on X, quoted at the top of this article, showing “Apple Intelligence” botching a voice transcription.

Only time will tell how Apple’s AI actually stacks up against Google’s AI offerings, which have been around for a while and have been refined over time.

Ultimately, the success of Apple Intelligence and its ability to compete with Google’s AI offerings will depend on real-world user experiences, performance, and the rate of innovation and improvement from both companies. That said, this isn’t the first time we’re seeing “AI” smarts on iPhones. The folks over at CNET recently highlighted a few AI-powered features that are already present on iPhones, such as “Live Text”, which lets you copy text from images, and “Personal Voice”, which can create a synthesized voice that sounds like you.

What are your thoughts on Apple’s AI? Would you consider upgrading to a “Pro” series iPhone just to experience Apple Intelligence, or are you fine without it? Feel free to share your thoughts in the comments section below.

TechIssuesToday primarily focuses on publishing 'breaking' or 'exclusive' tech news. This means we are usually the first news website on the whole Internet to highlight the topics we cover daily. So far, our stories have been picked up by many mainstream technology publications like The Verge, MacRumors, Forbes, etc. To know more, head here.