Researchers have discovered a startling fact about the data used to train some of the world’s most advanced AI models. Thousands of real, working API keys and passwords were found in a massive dataset called Common Crawl. This dataset helps train AI models from companies like OpenAI, Google, and others.

Common Crawl is a non-profit that collects a huge archive of web data. It grabs snapshots of websites from across the internet. Anyone can use it for free. And because it’s so big, many AI projects rely on it to teach their models.

A team from Truffle Security, a company that hunts for sensitive data, scanned the December 2024 Common Crawl archive. They checked 400 terabytes of data from 2.67 billion web pages. What they found was alarming. Nearly 12,000 valid API keys and passwords were hiding in there.

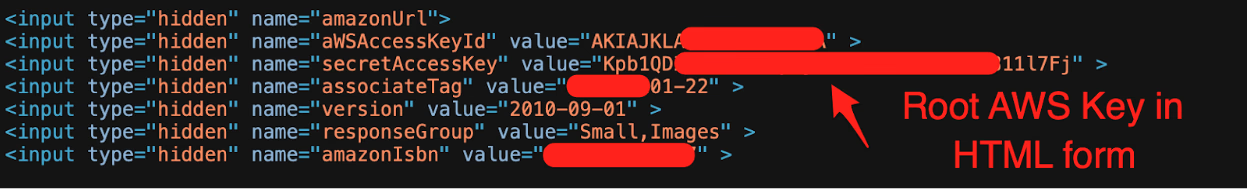

API keys and passwords are like digital keys. They unlock access to online services. If attackers get them, they can steal data, send spam, or take over accounts. Worse, many secrets showed up on more than one page. According to the report, about 63% were repeated. One API key popped up 57,029 times across 1,871 subdomains. That’s a lot of exposure.

They found keys for services like Amazon Web Services (AWS), MailChimp, and WalkScore. Some were even AWS root keys. Those are super powerful and can unlock an entire AWS account. After the discovery, Truffle Security worked with vendors to revoke thousands of these keys.

Why are these secrets out there? The researchers say developers often hardcode them into their apps for convenience. That’s a bad idea. A key that ships inside a public web page is easy for anyone to find.
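To show how little effort it takes to spot a hardcoded key, here is a minimal Python sketch. It assumes the widely seen format for AWS access key IDs (the prefix `AKIA` followed by 16 uppercase letters or digits). The function name and the sample page are made up for illustration, and the key is AWS's public documentation placeholder, not a real one. This only matches a pattern. It does not check whether a key actually works, which is the step the researchers relied on to count valid keys.

```python
import re

# Assumption: AWS access key IDs start with "AKIA" followed by 16 uppercase
# letters or digits. This is a pattern match only; it does not verify keys.
AWS_KEY_ID = re.compile(r"\bAKIA[0-9A-Z]{16}\b")


def find_candidate_keys(page_text: str) -> list[str]:
    """Return every substring that looks like an AWS access key ID."""
    return AWS_KEY_ID.findall(page_text)


# Hypothetical page snippet with a key hardcoded into front-end JavaScript.
# The value is AWS's documentation placeholder, not a live credential.
sample_page = '<script>const awsKey = "AKIAIOSFODNN7EXAMPLE";</script>'

print(find_candidate_keys(sample_page))
```

A real scanner covers many more key formats and then tests each candidate against the service, so treat this as a toy version of the idea.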

AI training data is typically filtered to remove sensitive information before it is used. But with so much data, some secrets slip through. This raises worries about AI safety. If models learn from insecure code, they might suggest bad practices to developers.

Truffle Security said, “LLMs trained on insecure code may generate unsafe outputs.” That’s a big red flag. Developers can reduce the risk by scanning their code for secrets with tools like TruffleHog. They should also load secrets from environment variables instead of hardcoding them.
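As a rough illustration of the environment variable approach, the Python sketch below reads a key at runtime instead of storing it in the source. The function name `load_api_key` and the variable name `MAILCHIMP_API_KEY` are placeholders chosen for this example, not names any service requires.

```python
import os


def load_api_key(var_name: str = "MAILCHIMP_API_KEY") -> str:
    """Read an API key from the environment instead of the source code.

    The default variable name is a hypothetical example.
    """
    key = os.environ.get(var_name)
    if not key:
        # Fail loudly so a missing key is noticed at startup, not later.
        raise RuntimeError(f"Set the {var_name} environment variable")
    return key
```

The key is set outside the code, for example in the server's configuration, so it never lands in a file that gets published or crawled. This only helps for code that runs on a server. Anything sent to the browser is still visible to visitors.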

This finding is a wake-up call. It shows why secure coding and careful data checks matter. Fixing this can keep AI powerful and safe.
