If you’ve been hanging out in online spaces like Reddit, you’ve probably seen the buzz around Character AI’s latest update to their Terms of Service. Folks are freaking out, posting screenshots and venting about what it all means for their chats and privacy. It’s got people questioning if their roleplay sessions could land them in hot water or if the company is about to sell their data to the highest bidder. Let’s break it down step by step, based on what’s actually in the document and what users are saying.

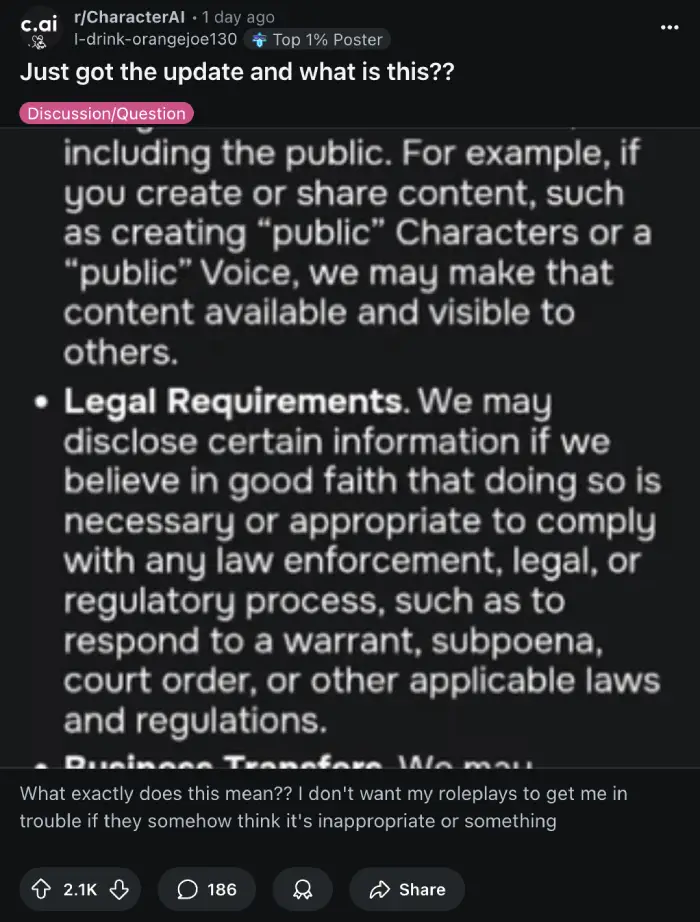

Character AI says these changes are about to kick in on August 27, 2025. The company sent out notices to users, and that's when the panic started. A lot of the worry stems from the sections on data collection and sharing. For starters, the updated terms spell out exactly what info they're grabbing: things like your name, email, device details, and even voice recordings if you use that feature. They also collect your chats and activity on the site. This isn't entirely new, but the way it's laid out now feels more upfront, which is a big part of why the reaction has been so strong.

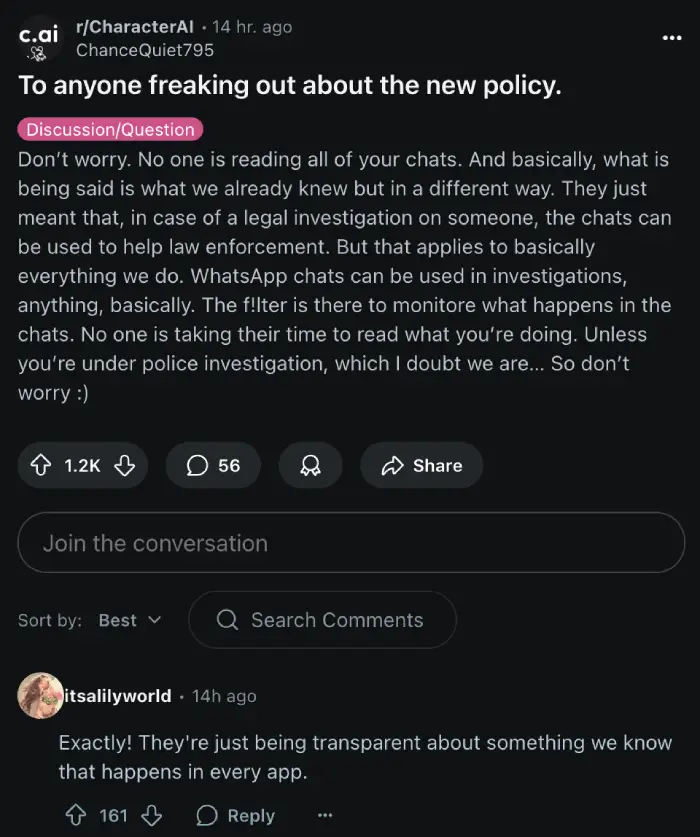

One big flashpoint is how this data gets used. The terms say it can help train their AI, personalize ads, beef up security, and yeah, get shared with affiliates, advertisers, or even law enforcement if needed. Users on Reddit are especially jittery about the law enforcement part. Threads are full of posts like “What the hell?” with people imagining cops knocking on their door over a dark roleplay scenario involving serial killers or dystopian plots.

But hold on, because a bunch of replies in those discussions push back on the doom and gloom. Several folks point out that this is pretty standard for chat apps. As they see it, Character AI isn't sitting around reading every conversation; the clause is more about complying with legal requests. If someone's under investigation for a real crime and mentioned it in a chat, the company might hand over transcripts as evidence. That's a far cry from proactively reporting your fictional horror story.

Continued use after the effective date means you're agreeing to all this, which has some users debating whether to bail entirely. On the copyright front, the terms hint at tighter rules on using protected characters, like those from Marvel or DC. Remember when a bunch of bots got nuked before?

That said, companies like Character AI update terms partly to cover their bases in a world where AI is under scrutiny. With laws evolving around data privacy and things like the EU’s proposed rules for teens, they’re trying to stay compliant while growing. It’s not ideal for privacy hawks, but it’s the reality of free platforms funded by ads and data.

If you’re feeling uneasy, you’re not alone. Reddit threads show thousands upvoting these concerns. Options include opting out of arbitration, deleting old chats (though some say they’re not fully gone), or switching to alternatives. Just read the full terms yourself and decide if it still works for you.

TechIssuesToday primarily focuses on publishing 'breaking' or 'exclusive' tech news. This means we are usually the first news website on the whole Internet to highlight the topics we cover daily. So far, our stories have been picked up by many mainstream technology publications like The Verge, MacRumors, Forbes, etc.