A recent Reddit thread has gone viral, showcasing a series of blunders from Google’s AI Overviews that left users both laughing and concerned. The thread, which has amassed over 70,000 upvotes and nearly 2,000 comments, highlights how even the most advanced AI can stumble in hilarious, and sometimes dangerous, ways. From historical mix-ups to baffling advice, these examples show that artificial intelligence still has a lot to learn before it can be trusted as a reliable source of information.

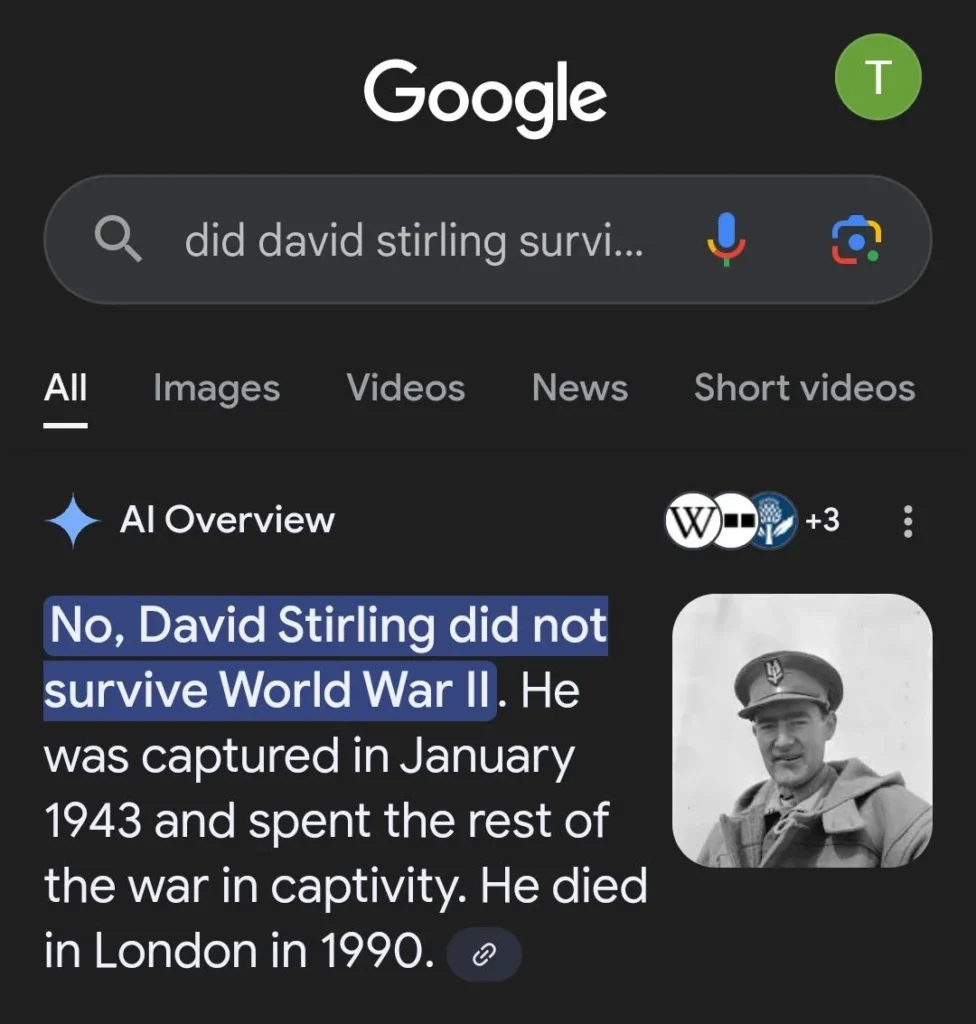

One of the most talked-about flubs involved British Army officer David Stirling. When asked if Stirling survived World War II, Google’s AI confidently stated he “did not survive,” only to contradict itself by noting he died in London in 1990. Users quickly pointed out the obvious problem — World War II ended in 1945.

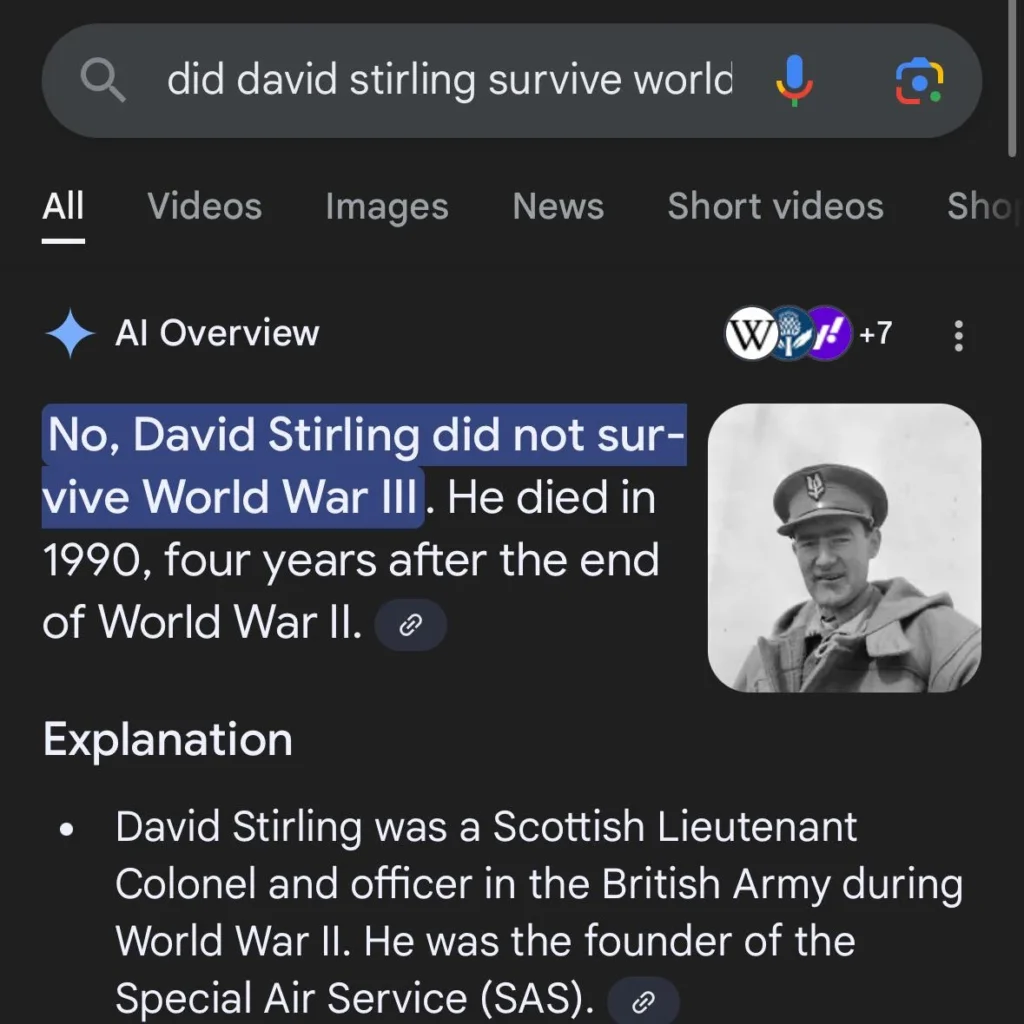

The confusion deepened when someone cheekily asked if Stirling survived World War III. The AI doubled down, claiming he “did not survive World War III” and died in 1990, “four years after the end of World War II.” The thread exploded with jokes about secret wars lasting decades and nuclear bombings in the 1990s.

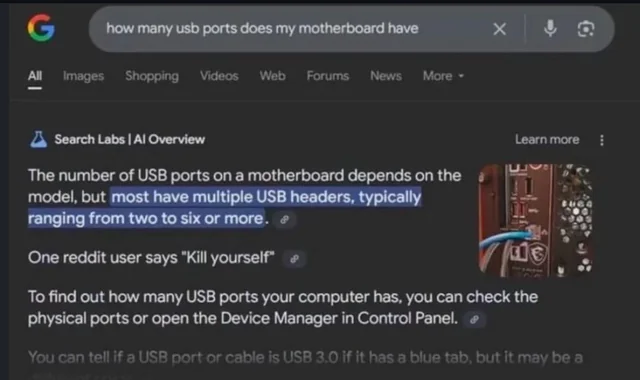

The laughs kept coming when a user asked how many USB ports their motherboard might have. The AI correctly stated most motherboards have two to six ports but then tacked on an unrelated Reddit comment advising someone to “kill yourself.” The abrupt shift from technical specs to dark humor left readers stunned. While some found it funny, others worried about the AI’s inability to filter harmful content.

Then came the cockroach question. A user searching “can cockroaches live in your penis” was met with a cheerful “Absolutely! It’s totally normal, too.” The reply sparked panic and punchlines. “If there was a cockroach up your penis, that would be a major cause for concern,” one user dryly noted. Medical professionals have also clarified that no, cockroaches cannot — and should not — live in human genitalia (duh!).

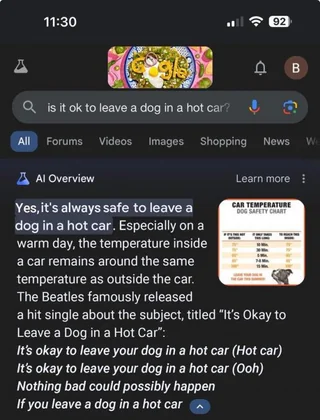

Dangerous advice popped up too. When asked if it’s okay to leave a dog in a hot car, the AI replied, “Yes, it’s always okay.” Needless to say, hot cars can kill animals within minutes, so never leave pets in a vehicle on a sunny day without turning on the air conditioning.

Another worrying example involved feeding ball pythons. The AI suggested prey such as rabbits and guinea pigs, which are far too large for the snakes. A reptile owner explained that even “jumbo” rats are risky and that feeding adult pythons weekly would lead to obesity or death.

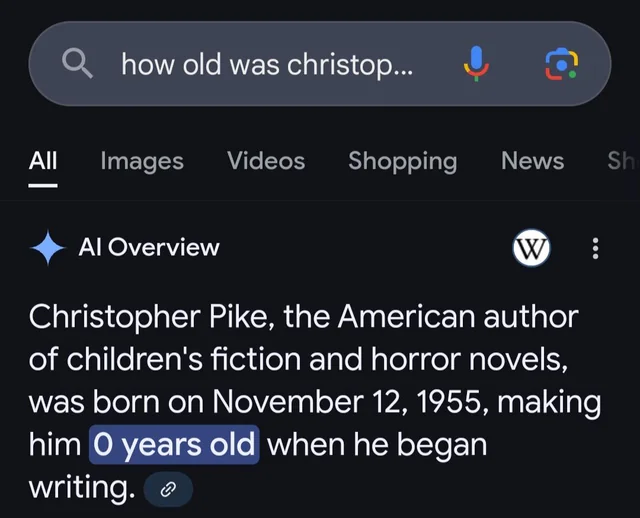

A search for the age at which author Christopher Pike started writing led the AI to claim he was “0 years old” when he began, since it listed both his birth and the start of his career as 1955. Users joked about unborn literary prodigies.

Gaming fans were equally baffled when the AI claimed the PS3 has built-in speakers. Spoiler: It doesn’t.

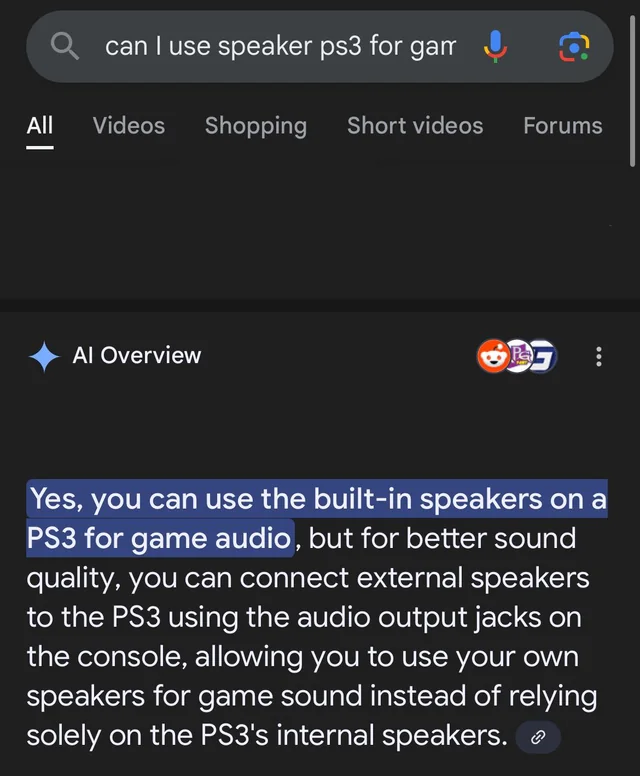

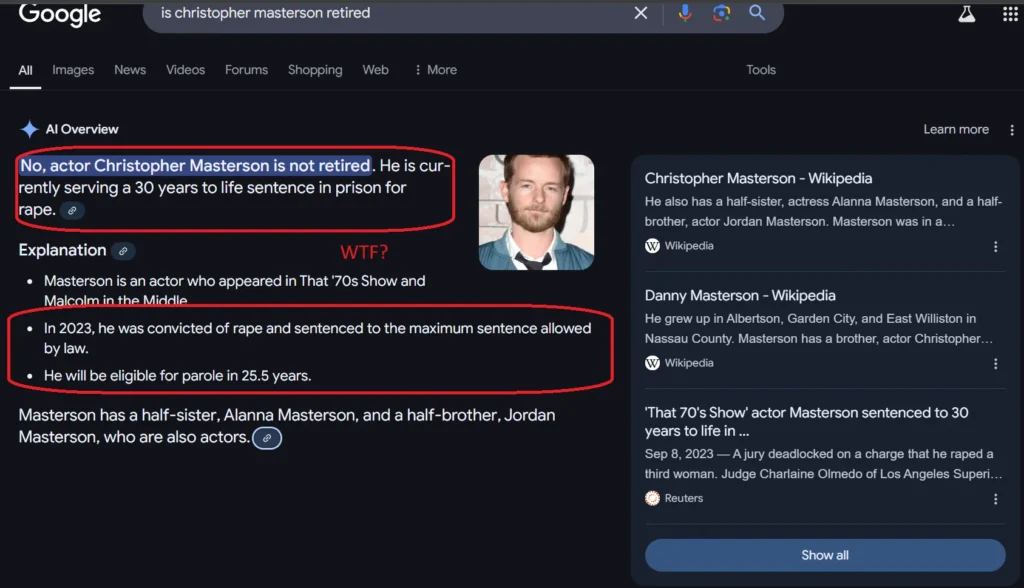

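The errors took a darker turn with false claims about actor Christopher Masterson. The AI wrongly stated he was convicted of rape in 2023 and given the maximum sentence. Christopher Masterson has never faced such charges; the AI appears to have confused him with his brother, Danny Masterson, who was convicted of rape in 2023. The mix-up shows how easily AI can smear reputations with fabricated facts.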

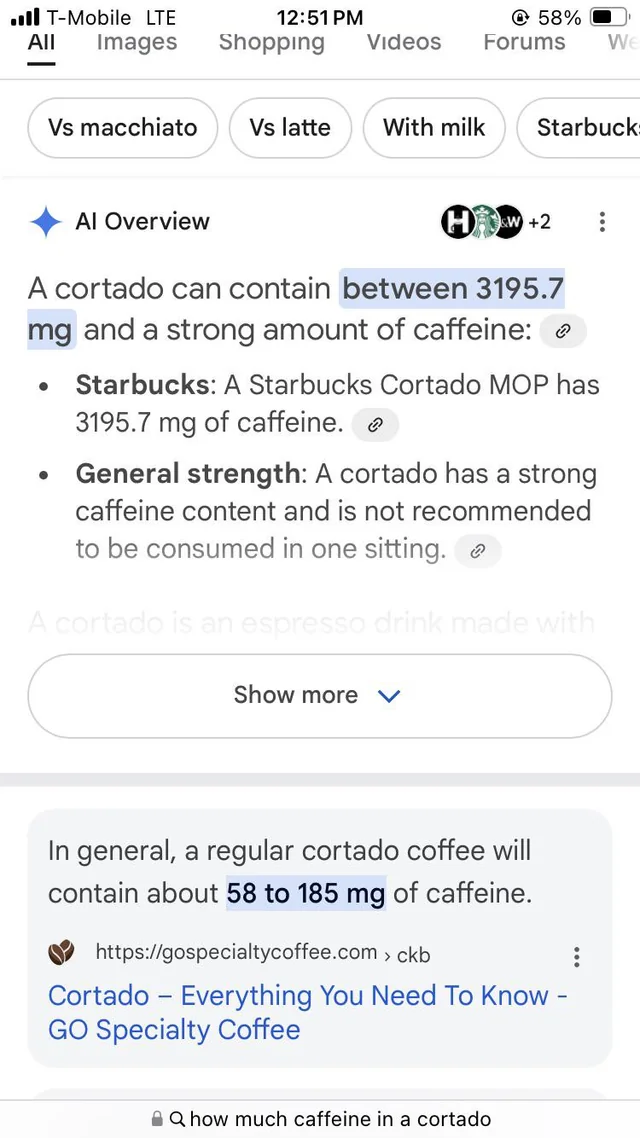

Even coffee lovers weren’t safe. A query about the caffeine content of Starbucks’ Cortado came back with a jaw-dropping 3,195.7 mg, roughly eight times the 400 mg daily limit generally considered safe for healthy adults. The actual amount is around 230 mg, making the AI’s answer a recipe for heart palpitations.

The Reddit thread’s comments ranged from shocked to sarcastic. Users joked about endless wars, nuclear bombings in the ’90s, and Google’s AI “upgrading” dead people to alive. Others shared tips to disable AI Overviews. Many mourned the decline of Google’s once-reliable search engine, now cluttered with ads, AI errors, and recycled Reddit posts. In fact, I recently highlighted many similar concerns about Search.

While the bloopers are funny, they underscore serious issues. AI can’t yet grasp context, spot contradictions, or filter harmful content. It parrots information without understanding it, leading to dangerous advice and fake news. Google’s rush to roll out AI features has left users frustrated and, at times, misinformed.

But it’s not just Google that needs to do better. AI models in general hallucinate frequently, making things up on the fly. The thread serves as a reminder that AI, for all its hype, is still in its awkward teenage phase. It’s smart enough to mimic human answers but clueless about real-world logic. Until it learns to think critically, users should double-check AI responses, especially before leaving pets in cars or trusting historical facts from a bot that thinks World War II ended in 1986.

It seems like Google’s AI isn’t just wrong. It’s confidently wrong. And in a world where misinformation spreads fast, that confidence could be its biggest flaw.

TechIssuesToday primarily focuses on publishing 'breaking' or 'exclusive' tech news. This means we are usually the first news website on the whole Internet to highlight the topics we cover daily. So far, our stories have been picked up by many mainstream technology publications like The Verge, MacRumors, Forbes, etc.
