Google has landed in hot water with its latest tweak to the Gemini 2.5 Pro model. A feature that once let users peek into the AI’s step-by-step reasoning has vanished from the AI Studio platform. It’s left a lot of folks scratching their heads and, frankly, pretty upset.

This “Critical Thinking” feature wasn’t just a gimmick. It showed users how Gemini worked through problems, breaking down its logic bit by bit. Developers loved it for debugging tricky prompts. Researchers used it to dig into the model’s capabilities. Even casual users found it handy for understanding why the AI spit out certain answers. Now, all they get is a short summary. It’s cleaner, sure, but it’s missing the depth people relied on.

The change didn’t go unnoticed. Online forums lit up with complaints. On the Google AI Developers Forum, one user didn’t hold back:

> The current summarized version, while present, lacks the depth and insight we previously had. This is not a minor tweak; it’s a fundamental downgrade in functionality.

Over on Reddit, the frustration echoed. A post from r/GoogleGeminiAI pointed out how the detailed view was key for tweaking prompts and grasping the AI’s thought process.

> It used to show step-by-step reasoning, pretty helpful for understanding how Gemini reached a conclusion… Now it’s just a summarized output. Clean? Yes. But kind of feels like we lost the ‘mind’ of the model in the process.

Why did this matter so much? That transparency set Gemini apart. One developer summed it up perfectly:

> Viewing the thinking and reasoning input context… can help one understand exactly when thousands of tokens still advance the generative goal forward, or rather when they are obsolete.

Without it, users feel like they’re flying blind, unable to refine their prompts or trust the AI’s answers the way they could before. Some apparently even canceled subscriptions over it.

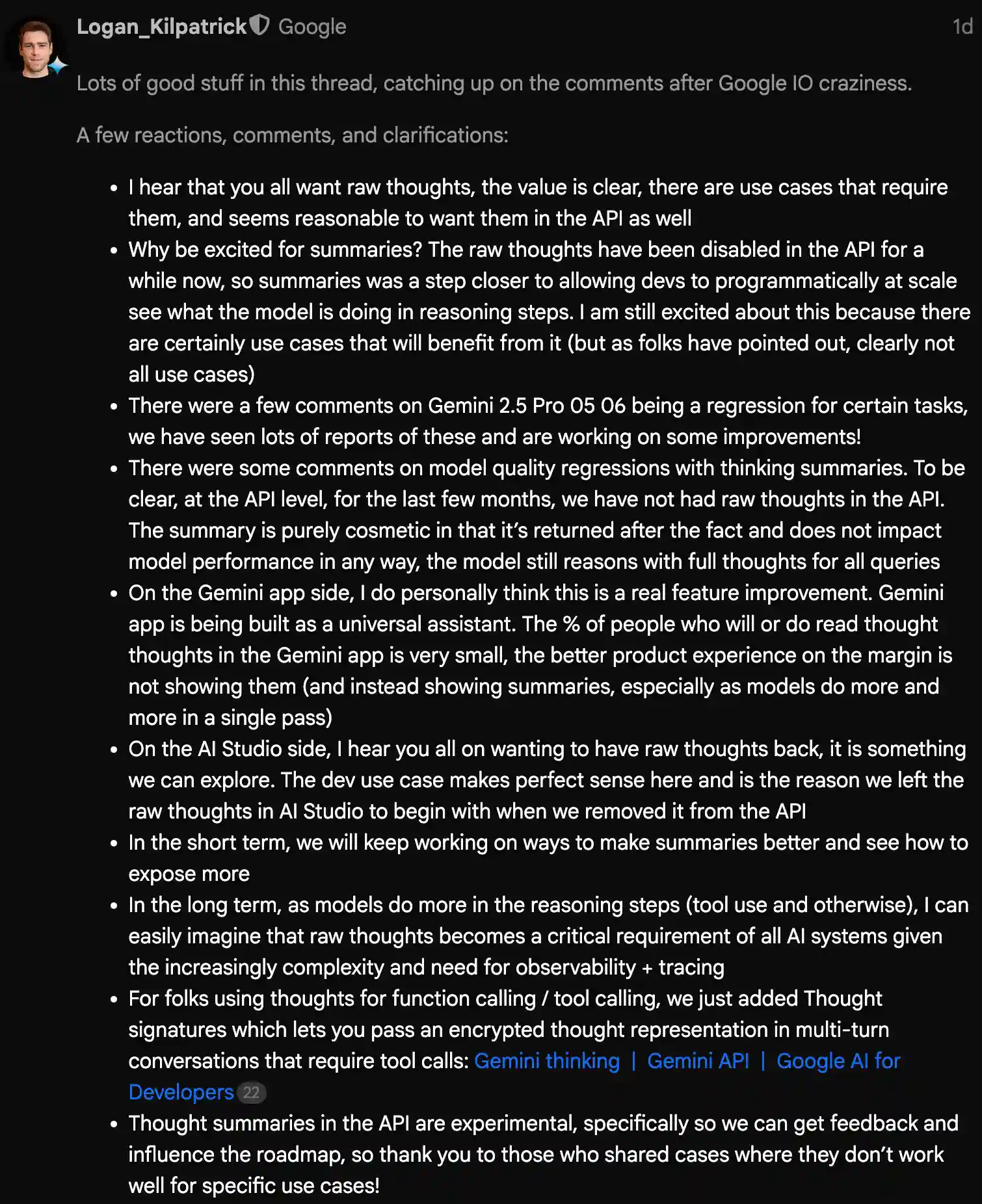

Logan Kilpatrick, a product lead for Google AI Studio and the Gemini API, stepped into the fray. He didn’t dodge the criticism. On the developer forum, he admitted the raw thoughts had value. “I hear that you all want raw thoughts, the value is clear, there are use cases that require them,” he wrote. He explained the switch to summaries was meant to help developers using the API see what the model was doing at scale. It was an experimental move, he said, and they’re already working on fixes based on feedback.

Kilpatrick insisted the model’s core reasoning hasn’t changed. It still thinks things through fully behind the scenes; the summaries are just a surface tweak. For AI Studio users, he left the door open. “On the AI Studio side, I hear you all on wanting to have raw thoughts back, it is something we can explore,” he wrote. Looking ahead, he suggested that as AI gets more complex, showing those raw thoughts might become a must-have for keeping things clear and accountable.

While some users theorized the change was meant to stop competitors from using chain-of-thought (CoT) traces to train their own models, Kilpatrick’s response focused on user experience and the direction of API development. The exchange has at least raised the possibility that a feature many developers considered essential could return. For now, it’s a waiting game to see if those detailed thoughts make a comeback.

TechIssuesToday primarily focuses on publishing 'breaking' or 'exclusive' tech news. This means we are usually the first news website on the whole Internet to highlight the topics we cover daily. So far, our stories have been picked up by many mainstream technology publications like The Verge, MacRumors, Forbes, etc.