In recent years, the meteoric rise of advanced AI language models and photorealistic image generators has flooded the internet with synthetic, AI-created content on an unprecedented scale. Outputs from systems like Stable Diffusion, DALL-E, GPT-3 and PaLM have made their way into virtually every corner of the web, often buried among human-made media.

This tidal wave of machine-generated text, images, code and audio has inevitably seeped into the results of our most critical internet information pipeline: Google Search. Increasingly, queries about everything from current events to history to the creative arts can surface AI-generated content intermingled with authentic human work.

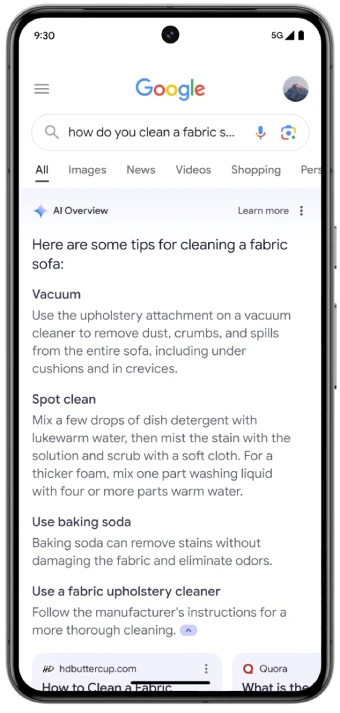

In fact, Google’s controversial new AI Overviews feature is yet another intrusion of AI into Search. Luckily, there are ways to avoid seeing AI Overviews.

Forget search results; I’ve even seen Google promote websites with 100% AI content in the Discover feed at times. These websites pick up dozens of articles from other websites and have AI rewrite them. Naturally, unless a publication has deep pockets, it can’t keep up with the output of AI sites, and such sites sometimes even outrank human-written content on Google Search. To its credit, Google says it is taking measures to filter low-quality content out of its results.

As someone who values authenticity and wants to avoid unknowingly engaging with AI-synthesized information as much as possible, I decided to go on a quest to reclaim my pre-AI Google experience. My aim was to find ways to systematically filter out this new synthetic content flood from my searches. What followed was a long journey down a rabbit hole that exposed both the depths of the AI generation challenge we now face and the lack of turnkey solutions from gatekeepers like Google.

Does Google have any official steps to filter out AI content?

My first stop was Google’s own product support forums, where I hoped the company’s experts could point me toward built-in AI filtering tools. However, the responses there made it clear that Search currently offers no easy way to opt out of AI content.

One Google product expert offered a few general tips, like using the “Tools” filters in image search to prioritize photographs over the other formats AI tends to produce. They also suggested using advanced search operators to limit results by source website if I wanted to stick to more reputable domains. But filtering images down to just “photographs” does no good when you aren’t actually searching for real-life photographs, so I ruled this suggestion out from the start.

Tweaking search operators can help, but manually entering the name of every site you don’t want results from is tedious. Still, for the sake of the quest, I gave it a shot. I found a Reddit post in which the OP shared a few notorious AI sites you can exclude from search results:

-site:openart.ai -site:craiyon.com -site:arthub.ai -site:playgroundai.com -site:opendream.ai -site:krea.ai -site:prompthunt.com -site:neural.love -site:tensor.art -site:creator.nightcafe.studio -site:lexica.art
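Typing that string out for every search gets old fast. A minimal Python sketch, assuming you keep the domains in a list, can append the exclusions automatically and turn the result into a search URL. The helper names `build_query` and `search_url` are hypothetical, and the `https://www.google.com/search?q=` format is simply the familiar address-bar URL, not an official API.

```python
from urllib.parse import quote_plus

# Domains from the Reddit list above; trim or extend to taste.
AI_DOMAINS = [
    "openart.ai", "craiyon.com", "arthub.ai", "playgroundai.com",
    "opendream.ai", "krea.ai", "prompthunt.com", "neural.love",
    "tensor.art", "creator.nightcafe.studio", "lexica.art",
]


def build_query(terms: str, excluded_domains: list[str]) -> str:
    """Append one -site: operator per domain to the search terms."""
    exclusions = " ".join(f"-site:{domain}" for domain in excluded_domains)
    # strip() drops the trailing space when the exclusion list is empty
    return f"{terms} {exclusions}".strip()


def search_url(query: str) -> str:
    """Percent-encode the query into a google.com/search URL (hypothetical helper)."""
    return "https://www.google.com/search?q=" + quote_plus(query)


if __name__ == "__main__":
    query = build_query("watercolor lighthouse", AI_DOMAINS)
    print(query)
    print(search_url(query))
```

Keep in mind that Google has long been reported to ignore words beyond roughly 32 per query, so a very long exclusion list may not be applied in full; treat that limit as an assumption rather than a documented guarantee.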

Another Redditor recommended adding the following operators to the end of your search query:

-"stable diffusion" -"ai" -"midjourney"

Adding these search operators filtered out AI images for the most part, but depending on what you’re searching for, you might still encounter the occasional AI-generated image.

For its part, the Google product expert acknowledged that ultimately, “There isn’t a way to completely remove AI-generated images from your Google search results at this time.” Given how rapidly AI image generators are advancing toward photorealism, relying solely on format filtering seemed inadequate anyway.

The expert’s parting words: “AI-generated images often rely on broad keywords. Try adding specific details to your search terms to help Google identify more relevant results.” In other words, my quest would require quite a bit of manual effort and scrutiny of every query.

User suggestions

Unsatisfied, I turned to the broader web for more community-sourced tips and solutions. This led me to various Reddit threads where others had clearly been wrestling with the same problem of scrubbing AI output from their searches.

As mentioned above, many people were attempting the same kind of Google search operator tricks that the company’s product expert had suggested. Adding strings like -"stable diffusion" -"ai" -"midjourney" could, in theory, help exclude some AI art from image queries. Others suggested filtering results with the “before:2022” operator, which should return only results published on the web before 2022 (after which AI-generated content started becoming popular). But this whack-a-mole solution isn’t perfect.
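Both tricks can be rolled into one reusable query. The sketch below is a minimal illustration, assuming Google accepts a bare year after `before:` as the Reddit tip implies; the `pre_ai_query` helper is hypothetical. Making the cutoff optional matters because a date filter defeats the purpose when you are looking for recent reviews or news.

```python
from typing import Optional

# Phrase exclusions suggested on Reddit. Straight double quotes make Google
# treat each entry as an exact phrase; the leading minus excludes it.
EXCLUDED_PHRASES = ["stable diffusion", "ai", "midjourney"]


def pre_ai_query(terms: str, phrases: list[str],
                 before_year: Optional[int] = 2022) -> str:
    """Combine search terms, phrase exclusions and an optional date cutoff."""
    parts = [terms]
    parts.extend(f'-"{phrase}"' for phrase in phrases)
    if before_year is not None:
        # Assumption: a bare year after before: is accepted, as the Reddit
        # tip implies. Pass None to skip the cutoff for recent topics.
        parts.append(f"before:{before_year}")
    return " ".join(parts)


if __name__ == "__main__":
    print(pre_ai_query("lighthouse painting", EXCLUDED_PHRASES))
    print(pre_ai_query("new phone review", EXCLUDED_PHRASES, before_year=None))
```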

When searching for reviews of the latest products or anything that happened recently, filtering by year is, quite frankly, useless. It does, however, work just fine if you’re searching for images and don’t want to be bombarded with AI-generated content.

One Redditor even shared an entire list of URLs from major AI writing and image generation sites that can be imported into browser extensions like uBlacklist, which hides chosen sites from search results. The list contains hundreds of domains linked to AI art tools like NightCafe, Lexica and DALL-E 2, as well as GPT-3-powered writing aids.
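Community lists often mix bare domains, full URLs and duplicates, so some cleanup helps before importing them. Here is a minimal sketch, assuming uBlacklist’s match-pattern rule format of one rule per line (for example `*://*.example.com/*`, where `*.host` covers the host and its subdomains); the `to_host` and `ublacklist_rules` helpers are hypothetical.

```python
from urllib.parse import urlsplit


def to_host(entry: str) -> str:
    """Normalize a list entry (bare domain or full URL) to a lowercase host."""
    entry = entry.strip()
    if "://" not in entry:
        entry = "https://" + entry  # let urlsplit parse bare domains too
    host = urlsplit(entry).hostname or ""  # .hostname is already lowercased
    return host.removeprefix("www.")


def ublacklist_rules(entries: list[str]) -> list[str]:
    """Turn domains/URLs into deduplicated match-pattern rules."""
    seen: set[str] = set()
    rules: list[str] = []
    for raw in entries:
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments in the shared list
        host = to_host(line)
        if host and host not in seen:
            seen.add(host)
            rules.append(f"*://*.{host}/*")
    return rules


if __name__ == "__main__":
    shared_list = ["lexica.art", "https://www.craiyon.com/", "# comment", "LEXICA.art"]
    print("\n".join(ublacklist_rules(shared_list)))
```

The printed rules can be pasted into the extension’s rule editor; check its documentation for the exact syntax your version expects.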

While impressively thorough, these “blocklists” still feel like a temporary workaround doomed to eventual obsolescence. As new AI services launch and rebrand at a dizzying pace, maintaining a centralized “blocklist” would always be a game of catch-up.

Still, I decided to adopt a multi-pronged filtering approach incorporating the best tips from Google, Reddit and other corners of the web:

- Install a search-result blocking extension like uBlacklist and keep an eye out for community-shared lists of AI sites to block.

- Use Google’s search tools to favor photographs (when necessary) and trusted original sources, and apply exclusionary operators like the “-ai” suggestions from Reddit.

- For any queries related to important research, fact-checking or professional work, manually review the results and add any AI-generated ones that slipped through the other filters to the blocklist.
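The manual-review step is the one that needs the most upkeep. A minimal sketch of it, assuming you keep your own master blocklist as a plain text file with one domain per line (the file layout and the `add_to_blocklist` helper are hypothetical), records each offending site once so the list can later be converted into whatever rule format your blocking extension expects.

```python
from pathlib import Path
from urllib.parse import urlsplit


def add_to_blocklist(url: str, path: Path) -> bool:
    """Append the URL's host to a one-domain-per-line file.

    Returns True if the host was newly added, False if it was already
    listed or could not be parsed.
    """
    if "://" not in url:
        url = "https://" + url  # accept bare domains as well as full URLs
    host = (urlsplit(url).hostname or "").removeprefix("www.")
    if not host:
        return False
    text = path.read_text(encoding="utf-8") if path.exists() else ""
    if host in text.split():
        return False  # already blocked
    # Make sure we don't glue the new entry onto an unterminated last line.
    prefix = "" if not text or text.endswith("\n") else "\n"
    with path.open("a", encoding="utf-8") as f:
        f.write(prefix + host + "\n")
    return True
```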

This multi-step, labor-intensive process did a reasonable job, giving me a fairly sanitized Google Search experience during my testing. Obviously AI-generated images were largely filtered out, and I could more reliably find human-written sources.

However, it required constant vigilance and maintenance. New AI services regularly cropped up and slipped past the blocklists, and the search operator tricks didn’t always catch AI output.

Alternatives to Google Search

During my research, I noticed a lot of chatter about two alternative search engines you might not have heard of: Kagi and Mojeek.

Kagi is a search engine designed to emphasize high-quality, human-generated content. Its clean interface and user-centric features make it a compelling choice for those frustrated with the inundation of AI content on Google. Kagi allows users to control ranking factors, ensuring that the results align more closely with their preferences. However, the biggest problem here is that Kagi is a paid search engine.

Look, I understand that you pay for Google Search with your personal data, but I can’t justify adding yet another subscription to my already long list of monthly services. Many other users I came across felt the same way. But don’t fret! If you aren’t willing to pay to search the web, Mojeek has your back.

As an independent search engine that does not track users, Mojeek prioritizes privacy and unbiased results. Its own index tends to surface content that is less influenced by AI-generated spam or low-quality material. When I compared Mojeek’s results with Google’s, I noticed less AI-generated content, especially in searches for digital art and trending keywords. However, even Mojeek isn’t completely immune to the AI bug: you will spot AI-generated images at times, albeit less frequently than on Google Search.

What I learned

Sadly, I must admit that I wasn’t 100% successful in my quest to get back to the pre-AI Google Search era. The search operator tricks didn’t always catch output from more advanced prompts. As generative AI rapidly evolves to produce more photorealistic imagery and fluent, contextualized language, it will only get harder to distinguish machine-made output from authentic human work.

Even now, there are AI language models that can engage in multi-turn conversations while displaying remarkable coherence, context-awareness and even emotional intelligence. Image generators have begun producing photorealistic digital portraits indistinguishable from real photographs to the untrained eye. The days of being able to rely on slight glitches or nonsensical outputs as indicators of synthetic content are rapidly ending.

My quest made me realize that in our increasingly AI-permeated world, we may be nearing an inflection point where a “pre-AI” internet experience is effectively impossible without huge compromises. Achieving a search experience purged of any synthetic media seems like it will require heavy-handed filtering that inevitably obscures valuable content and hinders access to information.

Perhaps more importantly, the notion of being able to systematically and accurately separate human and machine outputs is becoming an outdated construct. The lines are blurring as AI generation capabilities become intertwined with human creation through tools like ChatGPT, Gemini, Perplexity AI, and Claude that facilitate hybrid human-AI co-creation. A future of human-machine complementarity seems increasingly inevitable.

This thought became even more apparent when I explored the world of AI detection tools aimed at sniffing out synthetic content. While some utilize conventional computer vision and natural language processing to identify AI outputs, others are employing deep learning systems essentially pitting AI against AI. It’s AI software obfuscation and counter-obfuscation in an endless recursive loop.

What can be done

Ultimately, my quest to reclaim a “pre-AI” Google made me confront the reality that we may need to adjust our expectations and presumptions about being able to divide the world into human-made and machine-made buckets. As AI capabilities rapidly accelerate, authorship and creative identity may become a blurred spectrum rather than a binary. The old paradigms are shifting.

Does that mean we must resign ourselves to an internet future saturated with undetectable AI misinformation, deepfakes, and synthetic media of all kinds? I don’t think that doomsday scenario is inevitable. But we may need to rethink our defensive posture and stopgap measures of trying to quarantine the “AI” parts of the internet from the “human” parts.

Rather than an endless game of content policing whack-a-mole, perhaps we need governance standards, authentication systems, and platform incentives that encourage truthfulness and transparency about the origins of content – whether it’s crowdsourced, AI-assisted, purely machine-generated or anything in between. Having that context could be more empowering than outright blocking and obfuscation.

AI is a transformative technology, one that will impact nearly every aspect of the modern information environment. Trying to put that genie back in the bottle through banning and restricting may not be realistic or desirable. The key should be enabling tools that let each of us thoughtfully curate and interact with this new reality as we see fit based on our own preferences, threat models and use cases.

Major platforms and search engines like Google should recognize this emerging need and provide customizable controls that let users effectively navigate the new AI-pervaded Internet while making informed choices about what kinds of media they engage with.

Perhaps an “AI Preferences” setting could allow users to adjust sliders for synthetic imagery, generated text, conversational AI experiences, and so on based on their personal comfort levels. Having those self-service controls integrated into our primary information flows would be more empowering and sensible than reflexively blocking all AI expression through blunt filtering.

Until such solutions from big tech companies and content platforms emerge, we “AI-transparency-demanders” and “deepfake-avoiders” are stuck in an endless game of whack-a-mole. As Scott Rosenberg of Axios highlighted in his article “AI eats the web,” there’s not much you can do to escape AI content, at least for now.

TechIssuesToday primarily focuses on publishing 'breaking' or 'exclusive' tech news. This means we are usually the first news website on the whole Internet to highlight the topics we cover daily. So far, our stories have been picked up by many mainstream technology publications like The Verge, MacRumors, Forbes, etc. To know more, head here.