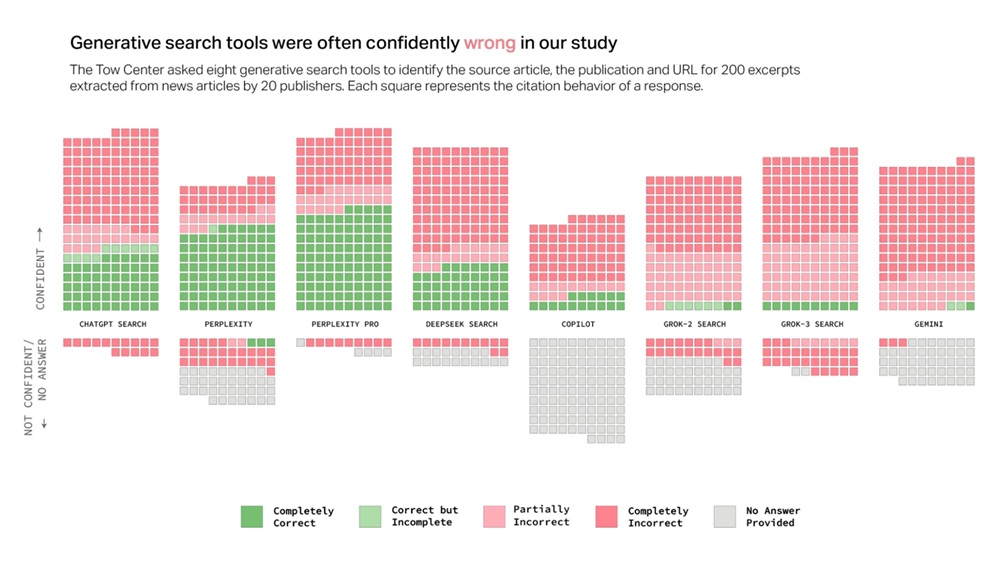

A recent investigation by the Columbia Journalism Review’s Tow Center for Digital Journalism, published on March 6, 2025, has revealed a troubling reality about AI-powered search engines and chatbots. The study rigorously tested eight prominent tools — ChatGPT Search, Perplexity, Perplexity Pro, DeepSeek Search, Grok-2 Search, Grok-3 Search, Gemini, and Microsoft Copilot — using 200 excerpts from news articles across 20 publishers. The results are stark: these tools frequently provide incorrect information with alarming confidence, undermining their reliability for users seeking accurate information.

The study’s key findings

The CJR researchers conducted 1,600 queries across eight AI search tools, evaluating their ability to accurately retrieve and cite news content. The results were grim:

- AI chatbots collectively provided incorrect answers more than 60% of the time.

- Error rates varied widely: Perplexity answered 37% of queries incorrectly, while Grok-3 Search got an abysmal 94% wrong.

- Premium models, which users pay for, gave confidently incorrect answers more often than their free counterparts, making their errors even more misleading.

- Many AI search tools failed to properly credit original sources, often fabricating citations or misattributing content.

- Some AI tools even ignored website restrictions designed to prevent them from scraping content.

The methodology was straightforward yet revealing. Researchers fed each chatbot a specific quote and asked it to identify the corresponding article's headline, publisher, publication date, and URL. Each response was then graded on whether it correctly identified the article, the publisher, and the link.

Grok-3 Search, developed by xAI, performed the worst: 94% of its 200 responses were inaccurate, and 154 of its citations led to broken or fabricated URLs. Perplexity, a popular choice among users, was wrong 37% of the time, while Gemini and DeepSeek also struggled, often linking to nonexistent sources. Even premium models such as Perplexity Pro and Grok-3 (whose premium tier costs $40 per month) had higher error rates than their free counterparts, delivering confidently incorrect answers with alarming frequency.

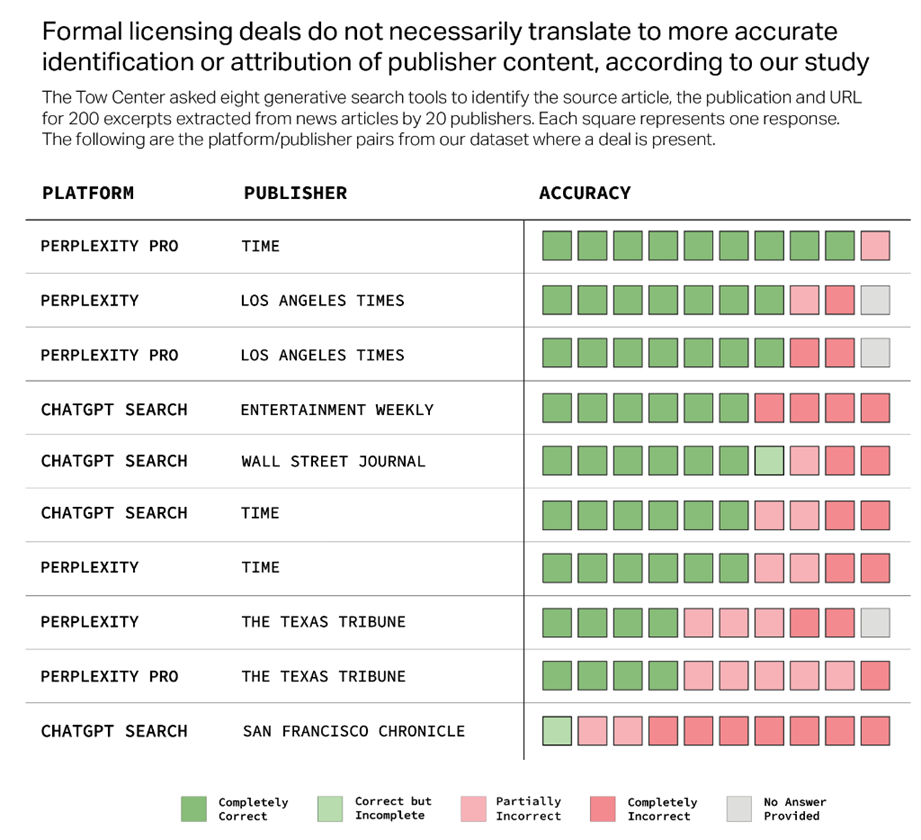

Beyond raw inaccuracy, the study uncovered deeper issues. Many tools ignored the Robots Exclusion Protocol (robots.txt), retrieving content from publishers that had explicitly blocked their crawlers. Perplexity Pro, for example, correctly identified nearly a third of the excerpts it should not have been able to access, raising ethical questions about its data practices. Chatbots also frequently cited syndicated or plagiarized copies of articles instead of the original sources, depriving publishers of traffic and credit. Licensing deals with news outlets, such as those held by OpenAI and Perplexity, offered no guarantee of accuracy: ChatGPT, for instance, misidentified content from partner publications such as the San Francisco Chronicle.

For me, these findings hit close to home. When I see an AI-generated answer, whether from Grok, ChatGPT, Gemini, or any other tool, I skip it entirely. This study confirms what I've long suspected: I can't trust these responses. Too often, I've encountered plausible-sounding nonsense or dead-end links, and frankly, it's not worth the gamble. I know AI will improve (Time's COO Mark Howard optimistically notes that "today is the worst that the product will ever be"), but until that day arrives, I'll keep heading straight to reputable websites. Human oversight and editorial standards still outshine the glossy veneer of AI confidence.

The implications are significant. With nearly one in four Americans now saying they have used AI in place of traditional search engines, according to the report, this flood of misinformation threatens both users and news publishers. The Tow Center warns that chatbots' authoritative tone masks their flaws, potentially eroding trust in credible journalism. While AI search tools may promise convenience, this study shows that they deliver chaos.

As someone who values accurate information, I refuse to rely on AI-generated answers. Until they can demonstrate consistent reliability, I’ll stick to reading directly from reputable sources. And if you care about getting the right information, perhaps you should do the same.

The full report is available on the Columbia Journalism Review website.

TechIssuesToday primarily focuses on publishing 'breaking' or 'exclusive' tech news. This means we are usually the first news website on the whole Internet to highlight the topics we cover daily. So far, our stories have been picked up by many mainstream technology publications like The Verge, MacRumors, Forbes, etc. To know more, head here.