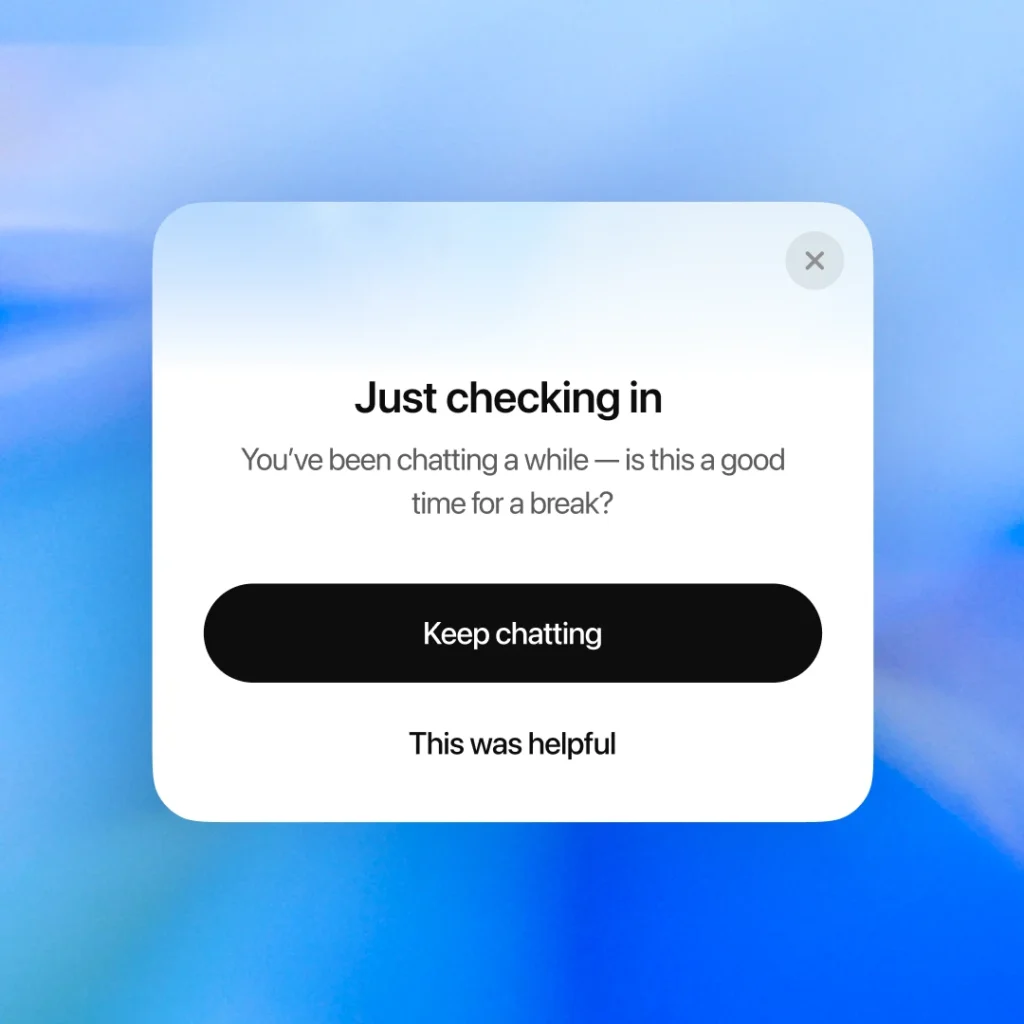

If someone had told me yesterday that OpenAI was going to nudge ChatGPT users to “take a break,” I’d have assumed it was a joke, or that the AI itself had become self-aware enough to suggest it. But here we are. OpenAI just added break reminders and rolled out new “mental health” features for ChatGPT. Some might say: finally! Others might ask: why now? That’s the question I can’t stop thinking about.

OpenAI’s move isn’t just a knee-jerk reaction or a trendy “be-well” gimmick. The company’s official stance is refreshingly direct: it says it isn’t chasing our prolonged attention. By its own account, the goal is for people to use ChatGPT, solve what they came to solve, maybe learn a thing or two or feel a bit smarter, and then close the tab and get on with their actual lives.

The update runs almost counter to the addictive design DNA of social media and most of the digital tools around us, which measure engagement in “hours spent,” not “problem solved and logged out.”

But why is all this happening now, well over a year into ChatGPT’s mainstream dominance? For one, the timing matches a shift in how the world treats tech giants: all summer, governments have paid relentless attention to the link between screen time, social media, and mental health disorders, especially in minors.

Case in point: some US states will soon require visible mental health warnings on social platforms, similar to those on cigarette packs. Australia is actively moving to ban under-16s from social media, and YouTube is testing a radical new approach to age verification. TechIssuesToday has covered these moves extensively, and you don’t need to squint too hard to see why AI, with even more potential than Instagram or TikTok to eat up time and breed dependency, is nervously looking in the same mirror.

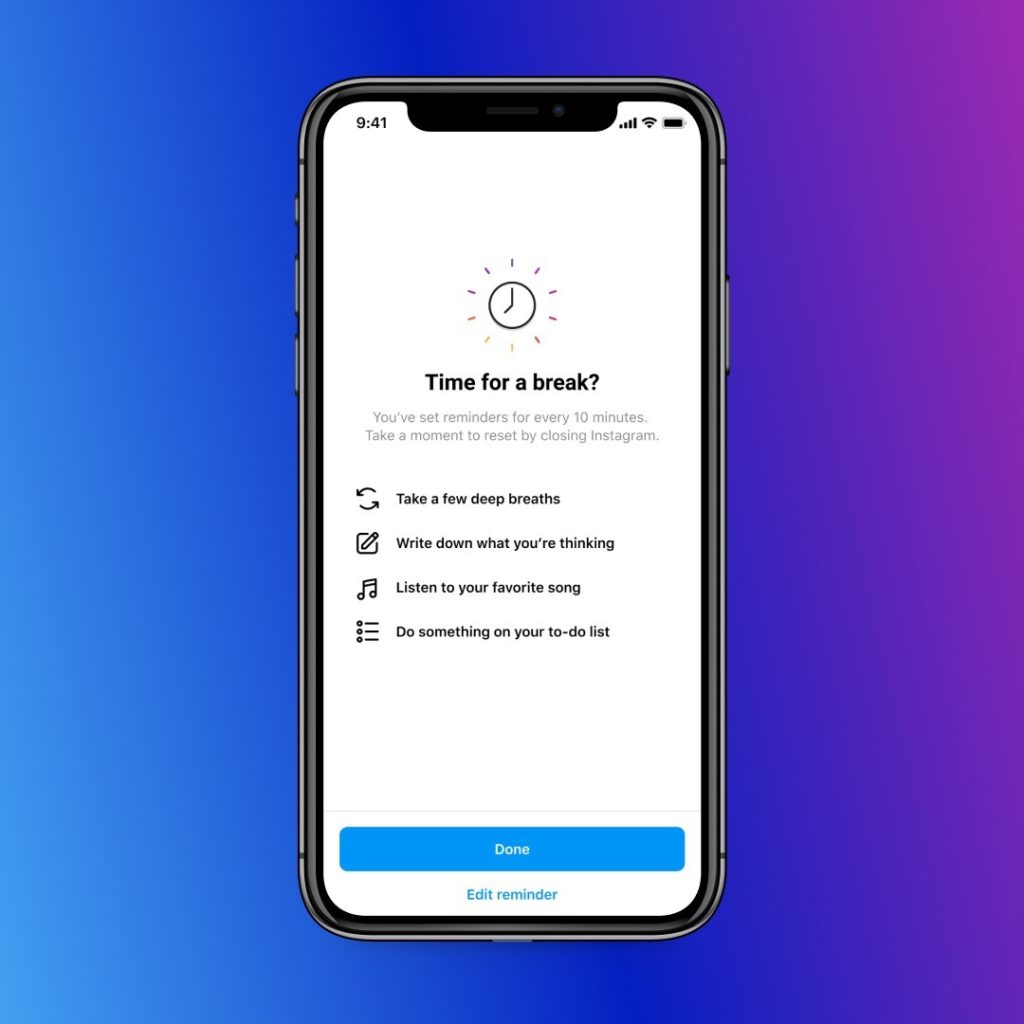

And let’s be clear: this kind of feature isn’t entirely new. Platforms such as YouTube, Instagram, and even TikTok started rolling out “take a break” nudges years ago, after mounting pressure over their addictiveness and its mental health consequences.

Opera launched a “mindfulness” browser with gentle break reminders this year too. So OpenAI’s update may actually be late to the party as far as digital well-being goes.

But there’s more to it than just governments waving warning labels. The science is finally catching up, and the news is… complicated. Over the last several months, studies and investigations have increasingly linked generative AI use with worrying psychological effects. Bloomberg captured a snapshot of the growing storm: professionals outsourcing thinking to AI, users spiraling into emotional dependency, even individuals experiencing psychotic breaks after excessive use of chatbots.

There’s a wealth of research, too. Bournemouth University has called out addiction and overdependency risks; MIT found that higher ChatGPT use correlates with increased loneliness and lower real-world socializing. In some heart-wrenching cases, chatbots have even been implicated in self-harm scenarios.

In response to this research, OpenAI has started tweaking how ChatGPT handles high-stakes personal conversations. Rather than hand out yes-or-no answers to questions like whether to end a relationship, it’s now meant to help users weigh their options and think things through, without steering them toward a decision. The service is also being fine-tuned to better detect signs of mental or emotional distress, and OpenAI is working with an international panel of physicians, therapists, and human-computer interaction experts to make its safeguards more robust. None of this was part of the original design. People simply started pouring their hearts into ChatGPT, using it as a therapist, confidant, or life advisor. Now OpenAI is scrambling to keep up with how people actually use the tech, not just how it was meant to be used, and with the dangers that come with that.

But is OpenAI’s concern for mental health only about protecting users, or is there a strategy here? While the company frames these “take a break” features as a bid for user well-being, they may also serve a different kind of retention strategy: nudging people to come back often instead of binging endlessly. This gentler, long-game approach could foster a healthier, more loyal user base.

Of course, privacy remains a looming issue. With users confiding in ChatGPT about deeply personal topics, the risk of surveillance and data exposure grows, especially since CEO Sam Altman has admitted that these conversations carry no real legal confidentiality.

I don’t know about you, but watching this unfold reminds me how tech, used or misused, inevitably mirrors the best and worst parts of us. As break reminders start popping up in ChatGPT, maybe we’ll all get a split second to decide: am I really here for the answer, or just for someone — something — to hear me out? And who’s listening on the other side?

TechIssuesToday primarily focuses on publishing ‘breaking’ or ‘exclusive’ tech news. This means we are usually the first news website on the whole Internet to highlight the topics we cover daily. So far, our stories have been picked up by many mainstream technology publications like The Verge, MacRumors, Forbes, etc.