Social media platforms use algorithms to decide what content appears on your feed, and recent studies show these algorithms tend to push more right-leaning content than left-leaning content. This can influence what you see and think, especially during important events like elections. A study by Global Witness found that in Germany, TikTok and X were recommending far-right content at high rates before the federal election on February 23, 2025. This isn’t just a one-off; other research, like X’s own 2021 findings and studies on TikTok, Instagram, and Facebook, backs up this trend.

Germany’s election and algorithmic bias

In Germany, Global Witness set up fake accounts to act like neutral users, following all major political parties. They found that 78% of the political content recommended in TikTok’s “For You” feed supported the far-right AfD party, far above its 20% standing in the polls. X wasn’t far behind, with 64% of its recommendations favoring AfD. Even Instagram leaned right, though less so at 59%. This means that even if you’re not picking sides, these platforms might still show you more right-leaning content, which is surprising given that the test accounts were set up to be neutral.
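
To put those numbers side by side, here is a minimal Python sketch that divides each platform's reported AfD share of recommended political content by the party's 20% polling figure. The function name `overrepresentation` and the idea of using polling share as the baseline are illustrative assumptions, not part of the Global Witness methodology. Instagram is left out because its 59% figure is described only as a right-leaning share.

```python
# Figures as reported in the text above (percent).
afd_polling_share = 20.0
recommended_share = {"TikTok": 78.0, "X": 64.0}


def overrepresentation(share: float, baseline: float) -> float:
    """Return how many times larger `share` is than `baseline`.

    Hypothetical helper: polling share is only a rough yardstick here.
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return share / baseline


# Ratio of recommended share to polling share, rounded for display.
ratios = {
    platform: round(overrepresentation(share, afd_polling_share), 2)
    for platform, share in recommended_share.items()
}

for platform, ratio in ratios.items():
    print(f"{platform}: {ratio}x the party's polling share")
```

A ratio above 1 means the party appeared in recommendations more often than its polling share alone would suggest. It says nothing about why that happened.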

But it’s not just TikTok and X. A 2021 study by Twitter itself (as X was known before Elon Musk’s takeover) showed that its algorithm amplified right-leaning posts more in six out of seven countries, such as Canada, where Conservative content got a 167% boost compared to the Liberals’ 43%. A TikTok study also found that engaging with transphobic content led to far-right video recommendations, suggesting the algorithm can push users rightward. For YouTube, findings are mixed: one study says it’s left-leaning, but another from Princeton and UC Davis found that far-right users got 65.5% ideologically aligned recommendations, more than far-left users at 58%.

This right-leaning bias might be a result of algorithms chasing engagement, since right-wing content often gets more clicks thanks to its divisive nature. But with owners like Elon Musk openly supporting far-right parties on X, it’s hard not to wonder if there’s more at play. This can shape public opinion, especially during elections, and it’s a big deal for democracy.

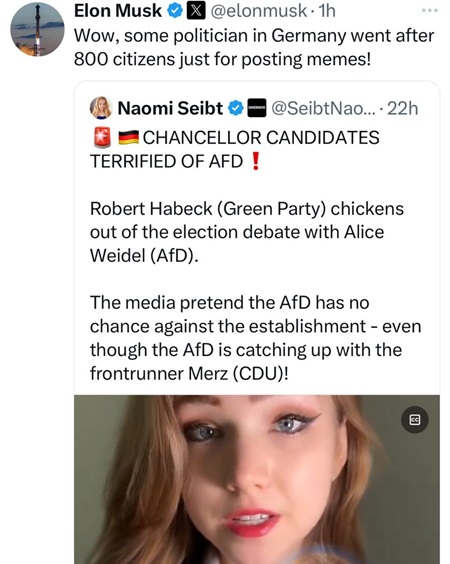

Speaking to TechCrunch, Ellen Judson, a senior campaigner at Global Witness, noted the lack of transparency in how these algorithms work. She suggested that the bias might be an unintended side effect of maximizing engagement, one that conflicts with democratic objectives. TikTok contested the methodology, arguing it wasn’t representative, while X did not respond. Still, Elon Musk’s support for AfD, including X posts urging votes for the party and a livestream he hosted with its leader, raised questions about potential influence.

Comparative studies across platforms

Several other studies corroborate these findings, particularly for X and TikTok, with mixed results for YouTube and Facebook:

- X (formerly Twitter): As noted earlier, a 2021 internal study found that X’s algorithm amplified right-leaning content in six out of seven countries. For example, in the UK, Conservative X posts were amplified 176% compared to Labour’s 112%, and in Canada, Conservatives hit 167% versus Liberals’ 43%. The study highlighted engagement as a potential driver, with right-leaning content generating more interactions.

- TikTok: An op-ed from the Fung Institute discussed a study where engaging with transphobic content led to rapid recommendations of far-right videos, including those promoting white supremacy and anti-Semitism. This suggests TikTok’s algorithm can push users towards right-wing extremism, aligning with the Global Witness findings.

- YouTube: Findings are contradictory. A 2023 study in PNAS Nexus claimed a left-leaning bias, with baseline recommendations showing 42% left-leaning versus 6% right-leaning for “News and Politics” videos. However, a December 2023 report by Princeton and UC Davis found that far-right users received 65.5% ideologically congenial recommendations, compared to 58% for far-left users, suggesting a reinforcement of right-leaning content for existing right-leaning users. This discrepancy indicates that YouTube’s algorithm may have a baseline left-leaning tendency but reinforces user biases, particularly for the right.

- Facebook: A 2023 study found that conservative users accounted for 97% of the fake news consumed during the 2020 US election, with algorithms reinforcing political bubbles. However, it wasn’t clear whether the algorithm promoted right-leaning content more to neutral users. Another report noted that right-wing pages earned 1.37 times more interactions on trans-related content, which could point to algorithmic bias, though it could partly be due to higher posting volume.
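
The amplification figures quoted for X can be compared in two ways: as a gap in percentage points or as a ratio between the two parties. The Python sketch below shows both for the UK and Canada numbers cited above. The helper name `amplification_gap` and the "right"/"left" labels are illustrative assumptions. The text does not say what baseline the percentages are measured against, so the results are a rough comparison only.

```python
# Amplification percentages as quoted in the text above.
amplification = {
    "UK": {"right": 176.0, "left": 112.0},      # Conservative vs Labour
    "Canada": {"right": 167.0, "left": 43.0},   # Conservatives vs Liberals
}


def amplification_gap(right: float, left: float) -> dict:
    """Hypothetical helper: compare two amplification figures.

    Returns the gap in percentage points and the right-to-left ratio.
    """
    if left <= 0:
        raise ValueError("left amplification must be positive")
    return {
        "difference_pp": right - left,
        "ratio": round(right / left, 2),
    }


gaps = {
    country: amplification_gap(values["right"], values["left"])
    for country, values in amplification.items()
}

for country, gap in gaps.items():
    print(f"{country}: +{gap['difference_pp']:.0f} points, {gap['ratio']}x")
```

The two measures can tell different stories. A large ratio may come from a small denominator, which is why the sketch reports both.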

The consistent finding across TikTok, X, and to some extent Facebook and YouTube, is that algorithms tend to promote right-leaning content, particularly when engagement is a factor. This is likely due to right-wing content’s higher engagement potential, as noted by Judson, driven by its often divisive or moralizing nature. However, Musk’s actions on X, such as X posts supporting AfD and hosting livestreams, suggest possible deliberate influence, adding a layer of complexity.

The EU’s Digital Services Act (DSA), enacted to improve algorithmic transparency, has yet to fully implement Article 40, which would allow vetted researchers access to platform data. This lack of transparency, as highlighted by Judson, means researchers can’t confirm if biases are baked into the algorithms or emergent from user behavior. The potential impact on democracy is significant, especially during elections, as seen in Germany and other contexts like the US 2020 election, where right-leaning bubbles amplified misinformation.

The evidence from recent studies, particularly the Global Witness report and X’s 2021 findings, strongly suggests that social media algorithms promote more right-leaning content than left-leaning content, with implications for public discourse and electoral integrity. While YouTube and Facebook show mixed results, the trend is clear: algorithms may be inadvertently or intentionally tilting the digital playing field rightward, a concern that warrants further investigation and regulatory action.

Perhaps they should take a leaf out of Brave browser’s book and simply add filters for left- and right-wing content.
