hey there's a whole new scam about. Spotify has a bunch of nonexistent AI country bands with fake bios "opened for Turnpike Troubadours" and albums of covers , all on big playlists. 500,000 monthly listeners.

— honkytonkheart (@HeartHonkytonk) July 18, 2024

Spotify has recently found itself at the center of an unsettling controversy where users are reporting a bizarre influx of AI-generated songs sneaking into their favorite playlists, masquerading under the names of well-known artists. From metalcore bands to country classics, these impostor songs are raising alarms among listeners who feel duped by what appears to be a carefully orchestrated scam — or worse, a sign of things to come.

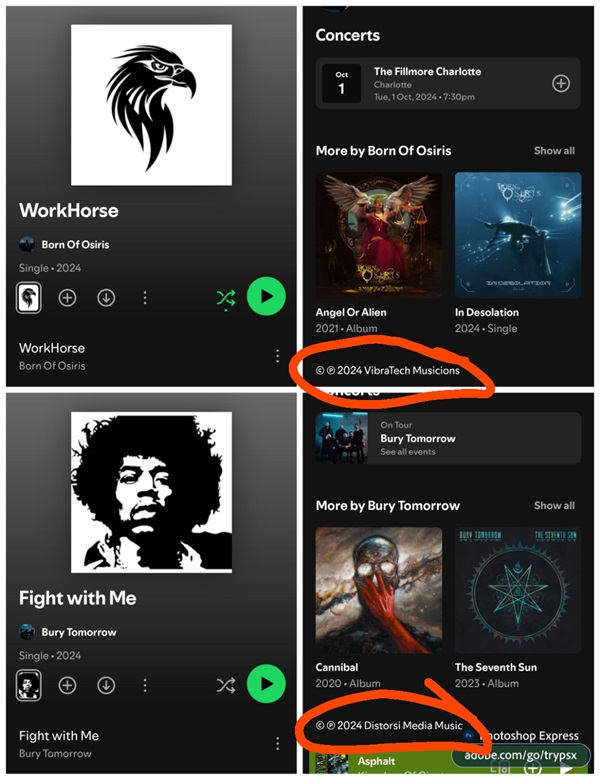

It all began with users noticing something off about their latest “new release” notifications. Songs appeared under the names of popular bands, but the music felt wrong: too generic, out of style, or simply not up to par with the artists’ usual complexity. One Spotify user pointed out how a supposed track from the progressive metal band Born of Osiris sounded more like a lackluster power metal instrumental. A quick check of the band’s official website and a Google search turned up no mention of the track, strongly suggesting it was not an official release.

Adding to the confusion, the tracks were often linked to dubious record labels with strange names like “Vibratech Musicions” — a far cry from the band’s usual label. The album art, too, looked suspiciously nondescript, a red flag for fans familiar with the band’s distinctive aesthetic.

The problem isn’t isolated. Social media and forums like Reddit are flooded with complaints about fake tracks linked to bands like DragonForce, Fit For A King, and even legends like Jimi Hendrix. Some users reported entire albums with the wrong covers and songs that didn’t match the advertised artist. The common thread? A growing suspicion that these tracks are AI-generated.

Are AI-generated songs a new form of streaming scam on Spotify?

So, what’s really going on here? While Spotify has yet to officially address these concerns, the issue appears to be part of a larger trend. AI-generated music, while not inherently against Spotify’s deceptive content policies, is becoming a tool for exploitation. In some cases, scammers may be uploading these tracks under real artist profiles to capitalize on unsuspecting listeners, potentially siphoning off royalties from the actual creators.

This isn’t the first time Spotify has faced criticism over AI content. A recent investigation revealed how AI-generated cover bands were being hidden in large playlists, racking up millions of listens. These so-called “bands” often have no social media presence, bizarre bios that sound like they were penned by ChatGPT, and a suspiciously high number of monthly listeners — often in the hundreds of thousands.

This problem came into sharp focus recently when an online user exposed a network of AI-created cover bands that were raking in millions of streams on the platform. These bands — Gutter Grinders, Savage Sons, Jet Fuel and Ginger Ales (which has since been removed from Spotify following the backlash), and Grunge Growlers — are allegedly exploiting a legal gray area to collect royalties on unlicensed covers, leaving the original creators high and dry.

The issue has sparked a heated debate among music industry insiders and fans alike, much like the recent desktop UI changes. The crux of the problem is that these AI-generated covers are allegedly being released without proper licensing or permission from the original songwriters. Instead, the creators of these covers are reportedly using AI tools like Suno to generate the music, then hiding behind the anonymity of their artist names to collect mechanical and public performance royalties.

Who’s really getting exploited?

The implications of this trend are unsettling. Spotify’s royalty system, which divvies up a limited pool of money among artists based on stream counts, means that every fake stream siphons money away from genuine artists. It’s a system ripe for exploitation, and with AI tools becoming more accessible, the problem is only set to grow.
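
To see why a shared pool makes fake streams costly for everyone else, here is a minimal sketch of a pro-rata split. It is a simplified illustration, not Spotify's actual payout formula: the function name, the artist labels, and every number below are hypothetical and chosen only to keep the arithmetic easy to follow.

```python
def pro_rata_payouts(pool, streams):
    """Split a fixed royalty pool in proportion to each artist's stream count."""
    total = sum(streams.values())
    return {artist: pool * count / total for artist, count in streams.items()}


# Hypothetical figures: a pool of 1,000 units and made-up stream counts.
POOL = 1000.0

# Before any impostor tracks are uploaded.
before = pro_rata_payouts(POOL, {"real_band": 600, "other_artists": 400})

# After a fake act picks up 250 streams from playlist placement.
after = pro_rata_payouts(
    POOL, {"real_band": 600, "other_artists": 400, "fake_band": 250}
)

# The pool did not grow, so the fake act's share comes out of everyone else's.
loss = before["real_band"] - after["real_band"]
print(f"real_band before: {before['real_band']:.2f}")
print(f"real_band after:  {after['real_band']:.2f}")
print(f"lost to dilution: {loss:.2f}")
```

In this toy case the real band's stream count never changes, yet its payout falls from 600 to 480 because the pool is now divided across more total streams.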

Some industry insiders believe Spotify might even have a vested interest in keeping these AI tracks in circulation. The theory goes that by flooding playlists with AI-generated content, Spotify can keep a larger share of the royalty pool for itself, as these tracks often don’t require the same payouts as those by real, human artists.

But the real victims here are the artists themselves. With streaming royalties already notoriously low, every dollar lost to AI-generated impostors is a dollar that doesn’t go to the people who actually create the music we love.

The situation has left many fans feeling frustrated and helpless. Some are calling for stricter screening processes from distribution platforms while others believe it’s time for regulatory bodies to step in. But until there’s a clear way to differentiate between AI and human-made music, the lines will remain blurred.

In the meantime, you might want to support your favorite artists in more direct ways like buying albums, purchasing merch, and attending live shows. After all, no AI can replicate the experience of a live concert or the connection between an artist and their audience.

As for Spotify, the platform may need to take a hard look at how it handles AI-generated content and its responsibility to both artists and listeners.

TechIssuesToday primarily focuses on publishing 'breaking' or 'exclusive' tech news. This means we are usually the first news website on the Internet to highlight the topics we cover daily. So far, our stories have been picked up by many mainstream technology publications like The Verge, Macrumors, Forbes, etc.