YouTube’s moderation AI has officially jumped the shark. In what might be the most absurd case of automated moderation we’ve seen this year, the platform’s safety algorithms flagged a creator’s livestream for violating its firearms policy. The offending weapon? A standard, handheld microphone.

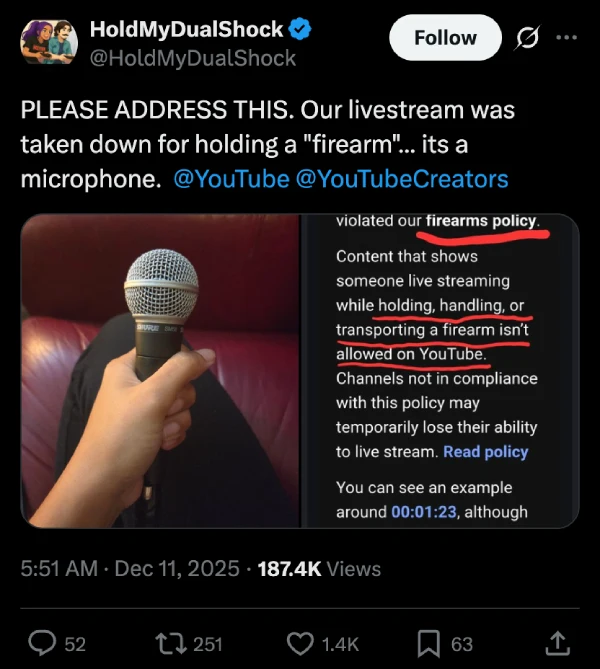

The incident came to light when X user HoldMyDualShock posted a screenshot of a takedown notice from YouTube. The email grimly stated, “We think your content violated our firearms policy,” claiming the video showed someone “holding, handling, or transporting a firearm.” The timestamp cited for this dangerous activity was 00:01:23.

But when you look at the evidence, it’s almost laughable. The “weapon” in question appears to be a Shure SM58 — arguably the most ubiquitous piece of audio hardware on the planet.

As the photos provided by the creator show, the object is unmistakably a microphone. You can clearly see the iconic silver mesh grille and the black tapered handle. Yet YouTube’s computer vision seemingly analyzed the handheld grip and decided it was looking at a Glock rather than a gig tool.

This fits a worrying trend we’ve been tracking. We know Google is aggressively pushing AI into search carousels to surface content, but the moderation side is proving far more volatile.

Just last month, our sister site PiunikaWeb reported that YouTube admitted to using AI to handle creator appeals. That revelation caused massive backlash from users who feared that removing humans from the loop would lead to exactly this kind of catastrophic error.

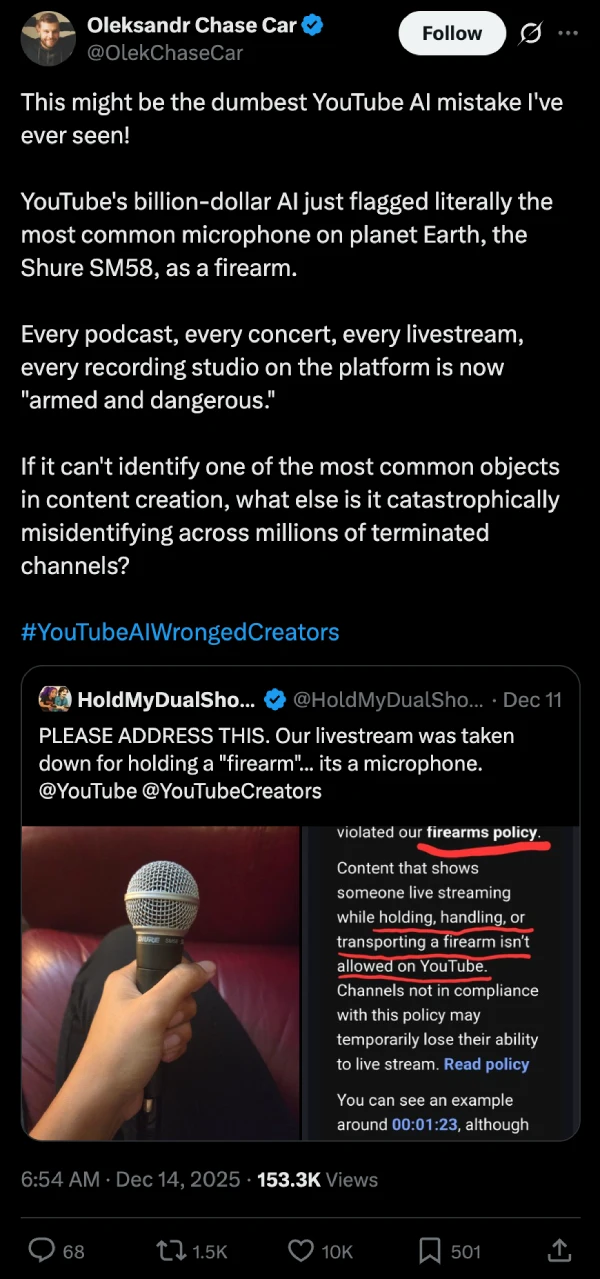

As X user Oleksandr Chase Car pointed out in a viral repost, this isn’t just a glitch; it’s a liability for the entire ecosystem. “Every podcast, every concert, every livestream, every recording studio on the platform is now ‘armed and dangerous,’” he noted.

TeamYouTube has since responded to the thread, offering a generic promise to “check on this,” but the damage to trust is already done.

This matters because YouTube’s moderation system is notoriously “guilty until proven innocent.” When a livestream is nuked mid-broadcast, its momentum is gone forever. Creators often lose revenue and standing with the algorithm while waiting for a human to manually review what should have been an obvious non-issue.

We’re used to AI hallucinating text, but hallucinating felonies in video feeds is a darker turn.

It forces us to ask: if the system can flag a Shure SM58 as a firearm, what happens when a creator holds a hairbrush, a game controller, or a bottle of water? If this is the “advanced” state of AI moderation in 2025, creators might want to start broadcasting with their hands empty — just to be safe.

Until YouTube patches this, you might want to keep your microphones in a holster.
