And visitors will have to check their Apple devices at the door, where they will be stored in a Faraday cage

— Elon Musk (@elonmusk) June 10, 2024

Apple has bet big on artificial intelligence with the announcement of its Apple Intelligence system at WWDC 2024. The company aims to bring powerful generative AI capabilities like text and image understanding to its devices and operating systems. However, Apple also faces significant privacy and security challenges as it integrates large language models like ChatGPT from OpenAI into its ecosystem. In multiple interviews after the WWDC keynote, including one with popular tech YouTuber MKBHD, CEO Tim Cook shared insights into Apple’s AI strategy and its novel approach to protecting user data. Apple has also outlined its efforts to enhance privacy in a blog post. Based on Apple’s official documentation and Tim Cook’s interviews, here’s everything we know so far about Apple Intelligence and how it handles user data.

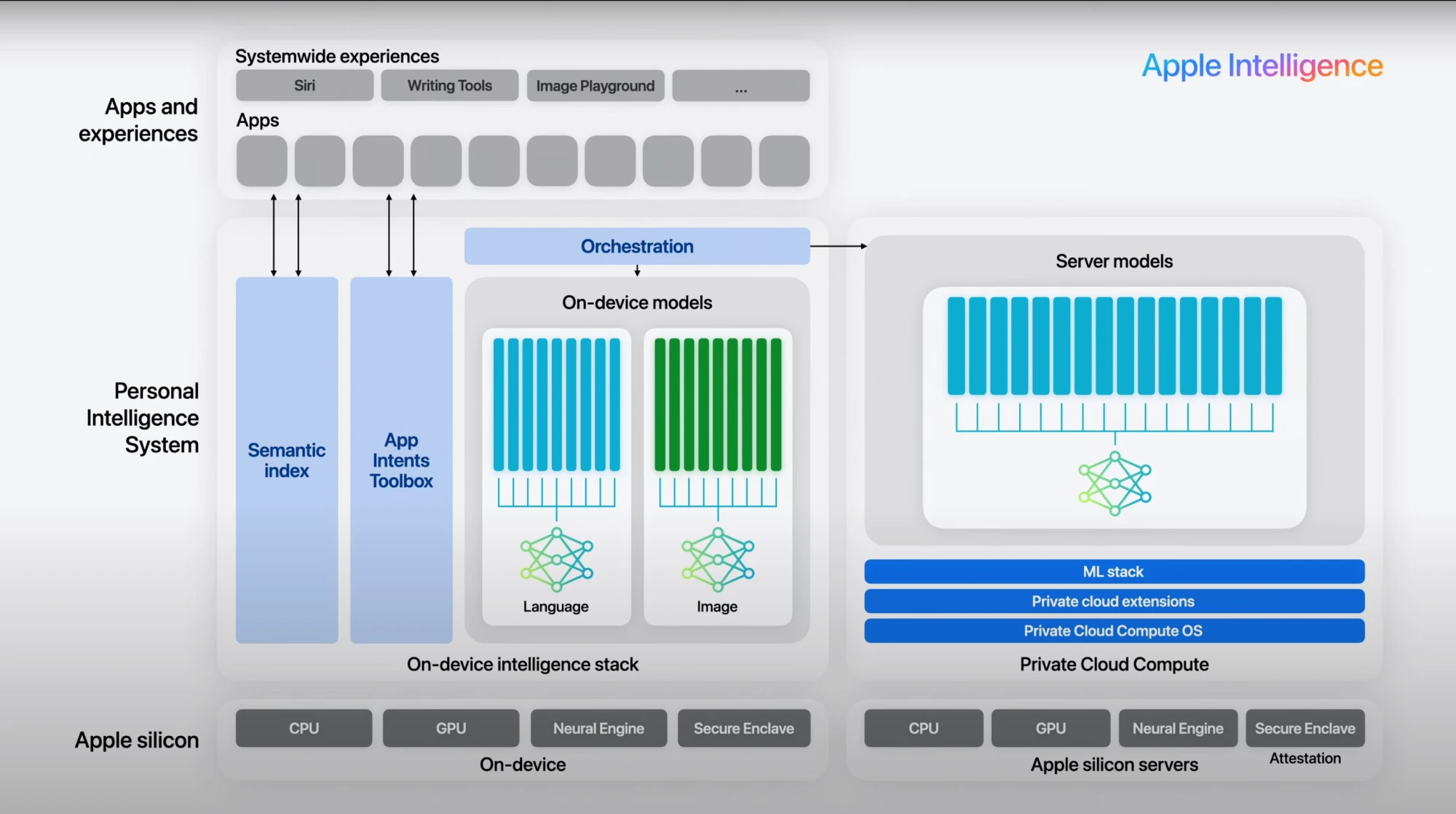

The core of Apple Intelligence

At the core, Apple Intelligence refers to the suite of machine learning models that will run locally on Apple’s devices like iPhones, iPads and Macs. By keeping the AI processing on the device itself, Apple can leverage its existing industry-leading security architectures and data protection systems.

“We’ve been executing with AI for a long time,” Cook explained in the interview with MKBHD. “It’s at the root of things like crash detection and Afib monitoring on the Apple Watch. But what has really captured people’s imagination is generative AI and the opportunity to provide a powerful assistant that can improve people’s lives in new ways.”

Apple has developed advanced on-device AI models for tasks like text and image generation, analysis, and language understanding. These will be integrated deeply into apps and services across iOS, iPadOS, macOS and the company’s other operating systems.

The benefits of on-device AI are clear from a privacy perspective – since user data never leaves the device, it is protected by hardware-based security like Apple’s secure enclaves, encrypted storage, and kernel integrity protection. No data is transmitted to cloud servers where it could potentially be intercepted or exposed to employees of internet companies.

Apple has long prioritized on-device processing, in part to reduce privacy risk. As an example, the “Siri” intelligent assistant processes speech recognition locally instead of sending audio to the cloud like other AI assistants.

“We are not waiting for comprehensive privacy legislation or regulation,” said Cook. “We already view privacy as a fundamental human right, which is why we go to great lengths to protect personal data and user trust.”

Unleashing Large Language Models (LLMs) with Private Cloud Compute

While keeping AI local provides robust privacy assurances, it also imposes limits due to the constrained hardware of mobile devices. The latest generative AI breakthroughs are being driven by extremely large language models, like those behind ChatGPT, which can contain over 100 billion parameters. Models of this size and complexity quickly become too resource-intensive to run efficiently on consumer hardware.

To bridge this gap and still maintain strict privacy guarantees, Apple has developed what it calls the “Private Cloud Compute” system. These are Apple’s own secure data centers running custom Apple silicon chips optimized for AI workloads – in essence, taking the company’s industry-leading silicon advantage to the cloud.

When an Apple device requires the enhanced capabilities of large language models that cannot be processed locally, it establishes a secure end-to-end encrypted channel directly to the Private Cloud Compute infrastructure. The servers run a hardened operating system derived from iOS/macOS foundations but with an extremely minimized attack surface.

According to Apple’s technical documentation, the operating system and all executable code on these servers leverage the same signature verification, secure boot, and runtime protection mechanisms as physical Apple devices. No unauthorized software can be loaded, and privileged access is eliminated to prevent disclosure of user data even in emergency response scenarios.

All communication to Private Cloud Compute is encrypted end-to-end using the secure enclave on the user’s device and the server’s enclave. Apple (or any other party) cannot intercept or view the content because they do not have the encryption keys. Only recognized, cryptographically verified servers can process the request. Apple servers delete all personal data from the request after providing a response.
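The "only recognized, cryptographically verified servers" rule can be pictured with a short sketch. This is not Apple's implementation: the allowlist, the release names, and the functions `is_trusted_server` and `send_request` are hypothetical, and a plain SHA-256 digest stands in for a real hardware-backed attestation. The idea it illustrates is simply that the client refuses to release a request unless the server reports a software measurement it recognizes.

```python
import hashlib
import hmac

# Hypothetical allowlist: digests of server software releases the client recognizes.
TRUSTED_MEASUREMENTS = {
    hashlib.sha256(b"pcc-release-1.0").hexdigest(),
    hashlib.sha256(b"pcc-release-1.1").hexdigest(),
}


def is_trusted_server(reported_measurement: str) -> bool:
    """Return True only if the reported software digest is on the allowlist."""
    return any(
        hmac.compare_digest(reported_measurement, trusted)
        for trusted in TRUSTED_MEASUREMENTS
    )


def send_request(prompt: str, reported_measurement: str) -> str:
    """Refuse to release the prompt unless the server passes the check."""
    if not is_trusted_server(reported_measurement):
        raise PermissionError("server software is not a recognized release")
    # A real client would now encrypt the prompt to a key bound to the
    # verified server; this sketch only reports what would be sent.
    return f"sent {len(prompt)} characters"
```

In this sketch a server reporting an unknown digest never sees the prompt at all, which is the property the paragraph above describes.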

“Privacy is a very key tenet of our thrust into AI,” said Cook. He further explained that with Private Cloud Compute, Apple wanted to integrate powerful generative AI models while still maintaining personal context and privacy.

Multiple levels of technical enforcement also prevent any unintentional data leakage. The data volumes on the Private Cloud Compute servers have their encryption keys rotated on every reboot, cryptographically erasing all stored data. Any retained data in memory is further isolated into separate address spaces which are recycled.
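Cryptographic erasure is easier to see in a toy model. The sketch below is an illustration under loud assumptions, not Apple's design: `EphemeralVolume` and `keystream_xor` are hypothetical names, and the XOR keystream is a stand-in for real disk encryption that should not be used to protect actual data. It shows why replacing the key on reboot makes old data unreadable even though the ciphertext is never overwritten.

```python
import hashlib
import secrets


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy cipher for illustration only: XOR data with a SHAKE-256 keystream."""
    stream = hashlib.shake_256(key).digest(len(data))
    return bytes(a ^ b for a, b in zip(data, stream))


class EphemeralVolume:
    """Stores only ciphertext; the key lives in memory and is replaced on reboot."""

    def __init__(self) -> None:
        self._key = secrets.token_bytes(32)
        self._blocks: list[bytes] = []

    def write(self, plaintext: bytes) -> int:
        """Encrypt and store a block, returning its index."""
        self._blocks.append(keystream_xor(self._key, plaintext))
        return len(self._blocks) - 1

    def read(self, index: int) -> bytes:
        """Decrypt a stored block with whatever key is currently held."""
        return keystream_xor(self._key, self._blocks[index])

    def reboot(self) -> None:
        # The old ciphertext is still stored, but the key that could
        # decrypt it is gone, so the data is effectively erased.
        self._key = secrets.token_bytes(32)
```

Before `reboot()` a block reads back as written; afterwards the same block decrypts to unrelated bytes, because the only key that matched it no longer exists.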

Apple has published an extensive privacy and security analysis for Private Cloud Compute, detailing mechanisms like pointer authentication codes, heap protection, and sandboxing to limit potential for exploitation and contain breaches. According to the company, even sophisticated attackers with physical data center access could not leverage a compromised server to target specific users.

The ChatGPT privacy controversy

While Private Cloud Compute provides a novel way for Apple to reap the benefits of large language models without compromising user privacy, the company has taken an additional controversial step – announcing integration with OpenAI’s ChatGPT model.

Unlike Private Cloud Compute, which leverages Apple’s own secure infrastructure, ChatGPT operates as a third-party cloud service. Any data sent to ChatGPT would become accessible to OpenAI and potentially visible to employees or susceptible to data breaches.

The ChatGPT announcement has drawn sharp criticism from voices like Elon Musk, who helped found OpenAI before later departing over strategic disagreements. In a post on X (formerly Twitter), Musk blasted Apple’s ChatGPT plans as “an unacceptable security violation” that opens devices up to data harvesting.

“Apple has no clue what’s actually going on once they hand your data over to OpenAI,” charged Musk. “They’re selling you down the river.” He threatened to ban Apple devices at his companies and force employees to store them in shielded “Faraday cages” to prevent data transmission.

There are legitimate grounds for some of Musk’s concerns around ChatGPT’s privacy practices. Previous research has shown that early versions of the language model could be coerced into divulging personal information like names, emails and phone numbers that was ingested during training. Additionally, by default OpenAI captures conversation data from ChatGPT to further train and improve the AI over time, though users can opt out of this data collection. Major companies like Apple have instituted bans on employee use of ChatGPT due to these privacy risks.

Apple has pushed back forcefully against the claims around its ChatGPT integration. The company says the ChatGPT functionality will be entirely opt-in and that users will be explicitly prompted for one-time consent before their data is sent to OpenAI for any request. It also says it will obscure IP addresses and other device identifiers.

“People will be able to use the free version of ChatGPT anonymously and without their requests being stored or trained on,” an Apple spokesperson told CBC News. However, the spokesperson confirmed that users opting for paid ChatGPT features will have their data covered by OpenAI’s policies around monetization and improvement of the model.

OpenAI itself has emphasized that users maintain control over their data with ChatGPT. “Customers are informed and in control of their data,” a spokesperson said. “IP addresses are obscured, and we don’t store [data] without user permissions.” However, some privacy experts remain skeptical that OpenAI can be fully trusted as a third party handling sensitive personal information. The company’s chatbots have at times exhibited concerning behavior around doxxing and hate speech.

“If you are feeding ChatGPT personal information, then it will take it,” warns Cat Coode, founder of data privacy firm BinaryTattoo. “Historically, ChatGPT has been less secure than Apple’s own privacy practices.”

Even if user data is purged from OpenAI’s training systems as promised, experts note that personal details could still inadvertently find their way into the knowledge base due to the model’s training on internet sources. This leaves at least some risk of data exposure or reidentification.

Apple’s commitment to AI transparency

While Apple has clearly calculated that the benefits of ChatGPT integration outweigh the privacy tradeoffs, the company is aiming to go well beyond its previous standards for transparency around the critical Private Cloud Compute system.

In an unprecedented move, Apple plans to release all the software powering its Private Cloud Compute servers – including firmware and bootloader code – for analysis by third-party security researchers within 90 days after production deployment. The software releases will be cryptographically logged on a transparency server to verify they exactly match the code running in Apple’s data centers.
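A transparency log of this kind can be sketched as an append-only hash chain. The code below is a simplified illustration, not Apple's log format: the class `TransparencyLog` and its methods are hypothetical, and production systems typically use Merkle trees rather than a simple chain. It shows the two checks a researcher would care about: whether a given software image was ever published, and whether the log's history has been altered.

```python
import hashlib


class TransparencyLog:
    """Append-only log where each entry's head hash commits to all earlier entries."""

    def __init__(self) -> None:
        # Each entry is (release digest, chained head hash).
        self.entries: list[tuple[str, str]] = []

    def append(self, release_image: bytes) -> str:
        """Record a release and return the new head of the chain."""
        digest = hashlib.sha256(release_image).hexdigest()
        prev_head = self.entries[-1][1] if self.entries else ""
        head = hashlib.sha256((prev_head + digest).encode()).hexdigest()
        self.entries.append((digest, head))
        return head

    def contains(self, running_image: bytes) -> bool:
        """Check whether the image a server claims to run was ever published."""
        digest = hashlib.sha256(running_image).hexdigest()
        return any(d == digest for d, _ in self.entries)

    def verify_chain(self) -> bool:
        """Recompute every head hash to detect edits to earlier entries."""
        prev_head = ""
        for digest, head in self.entries:
            expected = hashlib.sha256((prev_head + digest).encode()).hexdigest()
            if head != expected:
                return False
            prev_head = head
        return True
```

Because each head hash depends on everything before it, quietly swapping out an old release would change every later head, which is what makes such a log useful for checking that published code matches what is deployed.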

Apple will provide specific tools and documentation to analyze the Private Cloud Compute software stack, as well as publishing a subset of the security-critical source code. The goal is to allow external parties to verify and validate Apple’s privacy claims around areas like trusted execution, data encryption, stateless processing, and privilege limitations.

Apple’s security blog post reads: “We want security researchers to inspect Private Cloud Compute, verify its functionality, and help identify issues – just like they can with our devices. This is a real commitment to enable independent research on the platform.”

The company will reward significant findings through its bounty program. As mentioned in the blog post, “PCC represents a generational leap in cloud security,” and making the software available for in-depth scrutiny demonstrates Apple’s confidence in its novel approach.

Providing this level of transparency into production cloud infrastructure is unheard of in the tech industry. Cloud providers typically treat their software stacks as proprietary and make external examination extremely difficult. Apple’s move mirrors the unparalleled access it has provided to researchers analyzing its device operating systems and firmware over the years.

“Publishing the core PCC software is a strong demonstration of their commitment to enable real research on the platform’s security properties,” said Johns Hopkins cryptographer Matthew Green in an analysis posted on X. “It represents a real commitment by Apple not to ‘peek’ at your data as it transits their cloud.”

However, Green noted there are still limitations as researchers won’t have visibility into the specific source code being executed on production PCC nodes day-to-day, only the repository versions. There may also be undiscovered flaws or subtle mismatches between implementation and documentation that are difficult to detect remotely.

“But ultimately it’s vastly better than the zero transparency we usually get from cloud providers,” Green concluded. “Your phone might seem to be in your pocket, but a part of it essentially lives 2,000 miles away in Apple’s data centers now. This is the future we’re moving towards.”

Striking the right balance

While many details around Apple’s AI privacy enforcement remain to be seen, the company’s multi-pronged approach demonstrates a real commitment to upholding strict data protection standards even as it embraces powerful generative AI capabilities.

On-device AI processing, coupled with the novel encrypted Private Cloud Compute system, keeps sensitive personal information hidden from Apple’s employees, prevents it from leaving trusted hardware boundaries, and wipes it regularly to prevent long-term storage. Simultaneous efforts towards transparency and third-party validation aim to verify these privacy guarantees.

However, Apple’s integration of third-party models like ChatGPT into its ecosystem creates a clear exception and potential privacy tradeoff. Though the company has outlined steps to maintain user privacy and consent, the move opens the door for trusted partners to access certain user data. According to data privacy experts, given ChatGPT’s history of revealing sensitive details and monetizing user information, Apple’s use of the OpenAI model leaves key questions unanswered about how much personal data could still be exposed or mishandled.

As Tim Cook acknowledged, AI is a “huge opportunity” that also brings downsides. Apple is betting that advances in on-device AI combined with novel cloud protections can help realize generative AI’s potential while preserving strict privacy standards that have become a key product differentiator. Ultimately, maintaining user trust and protecting personal information will likely remain one of Apple’s biggest challenges as it navigates the rapidly evolving AI landscape. The privacy battle is just beginning as large language models and cloud-based AI services become more intertwined with our devices and digital lives. If you want to know more, you can check out how Apple Intelligence stacks up against Google’s AI.