Meta has published a blog post claiming its systems removed nearly 135,000 Instagram accounts for child safety violations this year, plus another 500,000 “linked” accounts across Facebook and Instagram. The announcement comes as the company faces mounting criticism over its ongoing ban wave that has wrongfully suspended thousands of legitimate users since late May.

Just days after I reported on Meta’s quiet policy changes that now allow account bans for simple interactions like liking posts, the company is highlighting its child safety enforcement efforts. Meta’s blog post focuses heavily on new teen protection features and expanded safety measures for accounts that feature children. The company says it removed accounts “for leaving sexualized comments or requesting sexual images from adult-managed accounts featuring children under 13.” It also claims the additional 500,000 accounts were “linked” to the original violating accounts.

However, users affected by the ban wave aren’t buying Meta’s narrative. The announcement has sparked fierce backlash both on Reddit, where thousands of wrongfully suspended users gather, and directly under Meta’s announcement on X. Users are flooding the comments with demands for account restoration and accusations of system failures.

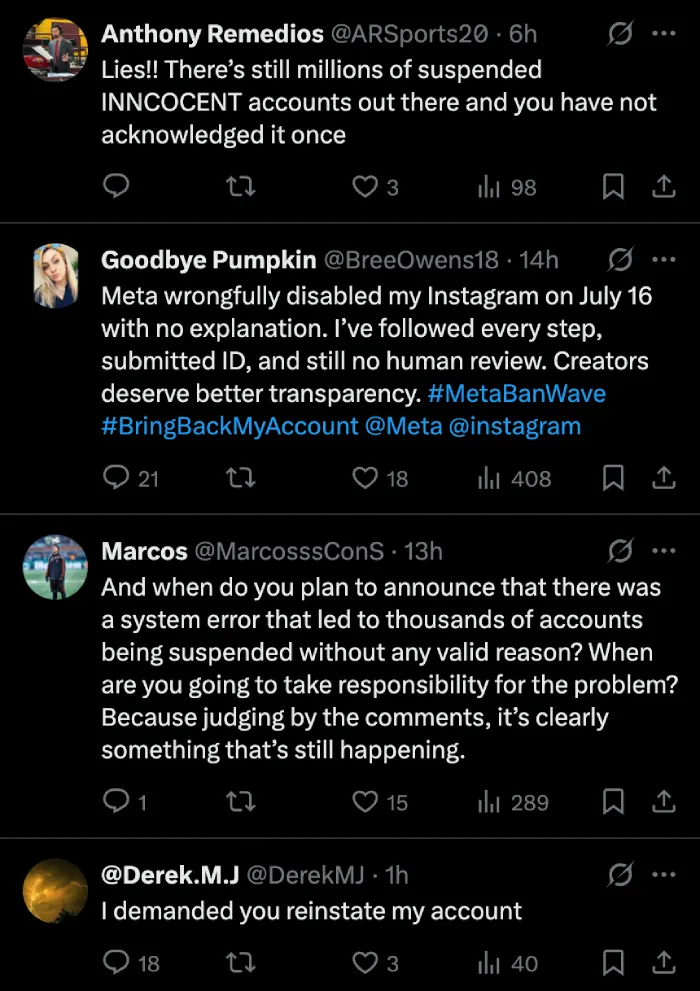

On X, the responses to Meta’s announcement tell a different story than the company’s polished blog post. Users are calling out the disconnect between Meta’s claims and their lived experiences.

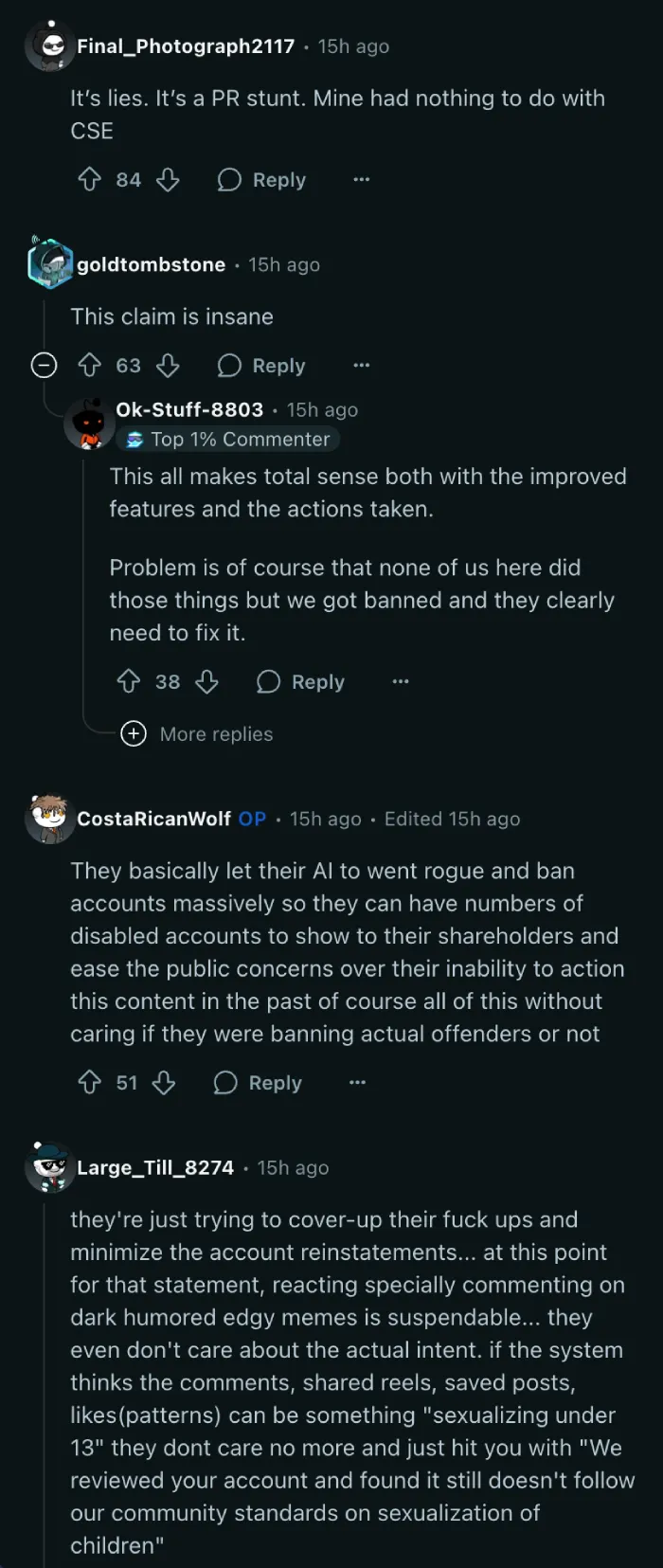

The backlash on Reddit has been equally intense, with users dismissing the announcement as pure PR. “It’s lies. It’s a PR stunt. Mine had nothing to do with CSE,” one affected user wrote.

The disconnect between Meta’s claims and user experiences highlights the core problem with the company’s AI-driven enforcement. While Meta presents these removals as targeted action against actual violators, the flood of angry responses on both platforms suggests otherwise. Many suspended users report they never engaged in any inappropriate behavior involving minors.

I’ve been covering the Instagram and Facebook ban wave extensively, and the pattern is clear. Meta’s automated systems have been casting an incredibly wide net, catching legitimate users alongside actual violators. The company’s blog post conveniently ignores this collateral damage.

The numbers Meta cites raise questions about the scope of false positives. If the company removed 635,000 accounts total, how many of those were legitimate users caught up in algorithmic errors? Meta doesn’t address this in its announcement, instead focusing on the threat these accounts allegedly posed.

Meta’s blog post also promotes its new safety features, including enhanced protections for teen accounts and adult-managed accounts featuring children. While these measures may have merit, they feel like an attempt to shift focus from the company’s enforcement failures to its protective capabilities.

Moreover, users have noticed other concerning patterns that Meta’s announcement doesn’t address. Many report their suspension reasons changed from “Child Sexual Exploitation” to “Sexualization of Children” in recent weeks, and suspicious overseas login data has mysteriously disappeared from account downloads.

What makes the announcement especially tone-deaf is its focus on protecting children while ignoring the harm caused to legitimate users. Small businesses, content creators, and regular users have lost years of content, connections, and in some cases income due to wrongful suspensions.

The company’s claim about sharing information with other tech companies through the Lantern program also raises concerns. If Meta’s AI systems are generating false positives at scale, sharing that flawed data could spread the problem across platforms.

Meta’s blog post reads like damage control rather than transparency. The company acknowledges removing hundreds of thousands of accounts but provides no data on how many appeals were successful, how many accounts were restored, or what steps it’s taking to prevent future false positives.

The disconnect between Meta’s public messaging and user experiences continues to grow. While the company celebrates its enforcement success, thousands of legitimate users remain locked out of their accounts, some for months, waiting for a review process that may never come.

TechIssuesToday primarily focuses on publishing 'breaking' or 'exclusive' tech news. This means we are usually the first news website on the Internet to highlight the topics we cover daily. So far, our stories have been picked up by many mainstream technology publications like The Verge, MacRumors, Forbes, etc.

IL, 25-07-2025

My account ikibun.library was suspended after I posted a flyer for a children’s event, and it still hasn’t been restored. It’s been over 4.5 months since March 2, 2025, with no reply to 27+ emails. The flyer, which contains no nudity or inappropriate content (just a normal event guide distributed to local schools in Japan), seems to have been wrongly flagged. Hoping Meta addresses these wrongful bans soon! #MetaBanWave2025 #InstagramSuspended

Summer Parkins, 24-07-2025

I’d love for you to do a story about my experience: Meta wrongfully banned all of my accounts, which stopped my business.


Sophy, 06-08-2025

You can send a registered demand letter to: CSC - Lawyers Incorporating Service, 2710 Gateway Oaks Drive, STE 150N, Sacramento, CA 95833, Attn: Meta Platforms Inc.
