Update 16/05/25 – 10:15 am (IST): We’re here several months later, and it seems that rather than being able to get a grip on AI moderation, social media companies are fumbling even harder. Just recently we covered a whole fiasco with Pinterest where user accounts were getting banned left and right for no good reason. After weeks, the company finally acknowledged the mishap, blaming an internal error.

And the issue with Meta’s AI moderation is still prevalent. In fact, the number of reports popping up on Reddit about unexpected bans has almost doubled over the last few months. I’ve come across dozens of complaints from frustrated users who claim their Instagram and Facebook accounts were banned out of the blue. One quick scan through the r/Facebook and r/Instagram subreddits is enough to see that this is getting out of control.

Earlier this year, we did highlight a potential trick users were using to get their accounts back through a Meta Verified subscription. But users shouldn’t have to shell out money for something that isn’t their fault to begin with.

As reports about random bans continue to flood platforms like Reddit, it’s clear that companies need to take drastic steps to prevent their AI moderation bots from going on ban-sprees. Feel free to drop a comment below if you feel you were also impacted by this ongoing problem with AI moderation.

Original article published on November 14, 2024, follows:

No matter how much some of us are against it, AI content moderation has become a cornerstone of social media management. Companies like Facebook, TikTok, and Instagram are already relying heavily on automated systems to filter out inappropriate, offensive, or spammy content. While AI is intended to make social platforms safer and more enjoyable, many users, including myself, have found the systems clunky and unpredictable. Whether it’s a simple Facebook post marked as spam or a supportive comment flagged as offensive, these algorithms seem to miss the mark more often than they should.

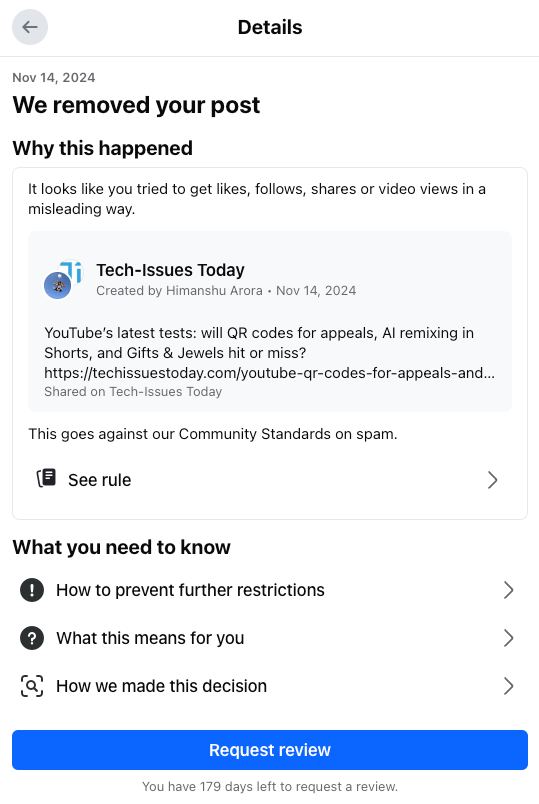

Facebook: AI moderation gone wrong

If you’ve ever had a seemingly innocuous post flagged on Facebook, you’re not alone. Many of us, myself included, have found Facebook’s AI moderators frustratingly aggressive. Meta introduced an AI content moderation system in 2021 to tackle “harmful content”. However, despite the improvements since then, the AI still isn’t as good as one would have hoped. Our social media handler experienced the issue firsthand while trying to share stories on our website’s Facebook page: posts get flagged as spam out of nowhere. It’s almost like a guessing game each time, with no way to predict which post will get flagged. And the appeal process? Let’s just say it’s hardly efficient.

TikTok’s affair with AI moderation

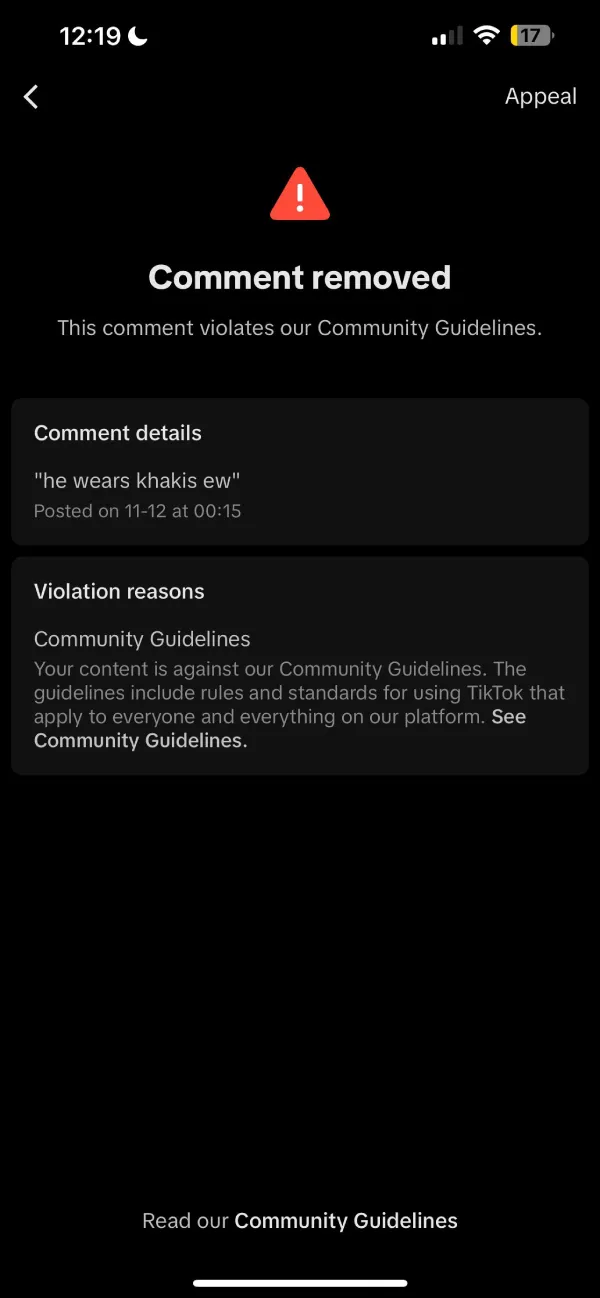

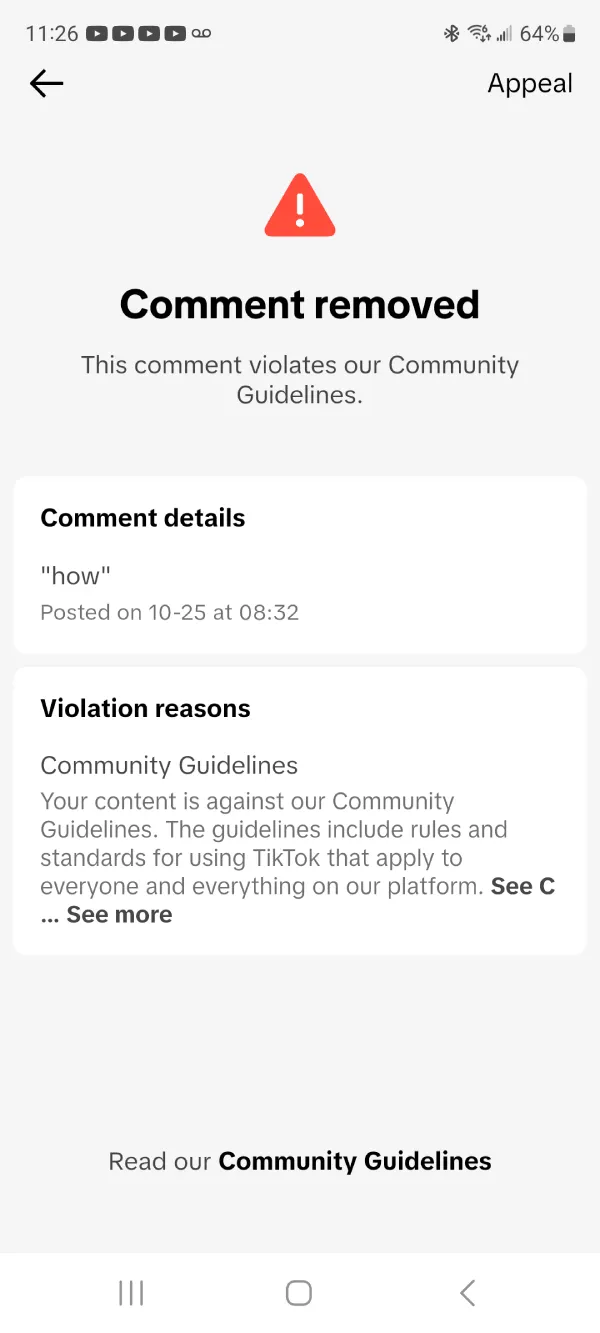

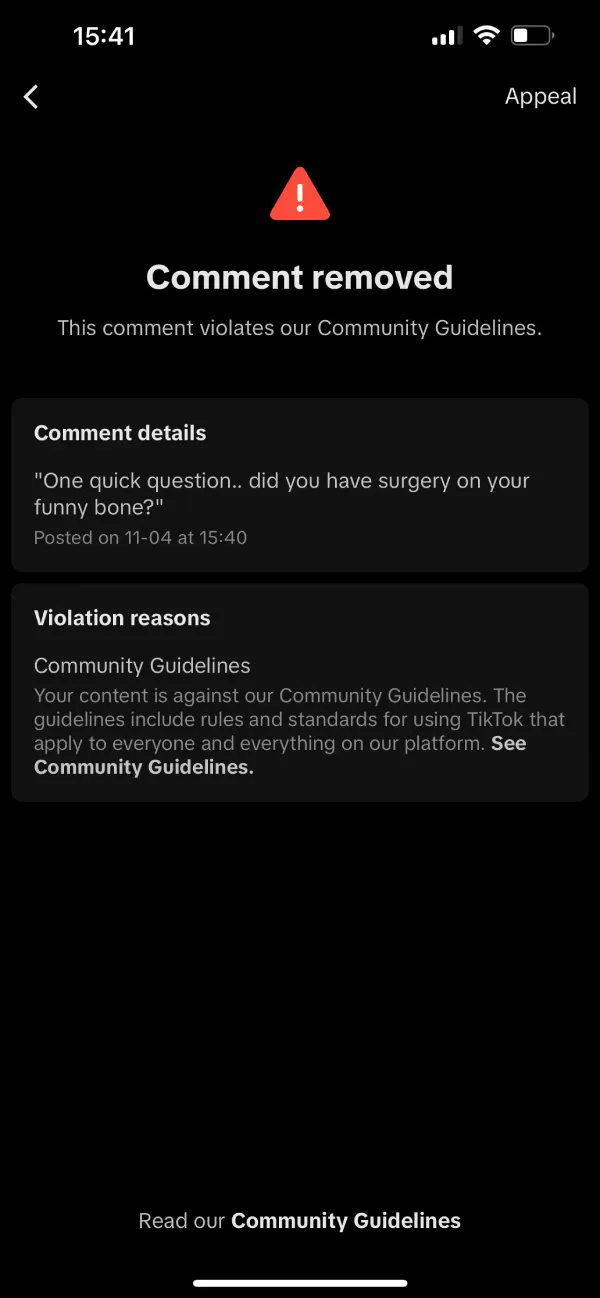

TikTok recently doubled down on AI-driven moderation, sparking a new wave of complaints from its user base. While the company hopes to manage its sprawling platform with machine learning, the recent change has seen TikTok users swamped with wrongful content removals. According to numerous Reddit posts, users report that comments expressing support or humor are tagged as community guideline violations. In one post, a user shared that their innocent comment to “get well soon” on a video about a disabled child was removed, with AI flagging it as inappropriate.

I’ve added a few screenshots of seemingly harmless comments being removed on TikTok for reference:

If this doesn’t prove that AI has a long way to go before we consider it reliable, I don’t know what else might change your mind. If TikTok’s reliance on AI deepens, expect more such backlash, with hundreds of posts being shared widely online.

Instagram’s struggle with AI moderation

It’s not just TikTok and Facebook — Instagram’s AI-powered moderation is also generating backlash. Users are posting on platforms like Reddit about seemingly harmless comments that get removed without explanation. For instance, one user complained about a benign comment getting pulled, sparking discussion about the app’s AI bot being “out of control.”

AI on Instagram, much like on Facebook, has a track record of missing context entirely. Comments meant as encouragement or jokes are misinterpreted, and users are left scratching their heads, wondering why a positive message was removed while harmful comments remain untouched. I’ve been on the receiving end of these AI moderation blunders on multiple occasions. Luckily, Meta isn’t turning a blind eye to the aggressive content moderation system on Instagram and Threads. Instagram head Adam Mosseri confirmed that the company is looking into the problem. But it’s not clear how long it’ll take for us to see any real-world changes.

AI content moderation needs a major overhaul

The big issue with AI moderation right now is that it just doesn’t get the subtleties of human communication. These systems, trained on vast datasets, often miss the mark when it comes to understanding context, sarcasm, humor, or cultural nuances. This means that perfectly harmless posts, particularly those laced with slang or positive vibes, end up getting mistakenly flagged or deleted.

Sure, tech companies love to talk about how AI learns and improves, but let’s be real — AI tools are still pretty blunt instruments when it comes to the fine points of our everyday chats.

And while you might argue that these systems need more time to evolve, the question remains: How long should we wait? Social media giants seem to drag their feet, possibly overwhelmed by their own massive scale. But with users getting increasingly fed up, it’s clear something’s got to give, and soon.

What needs to be done?

For social platforms to win back their users’ trust, they’ve got to rethink their approach to content moderation. It’s not just about AI; integrating human moderators into the mix appears to be the way to go. These folks can add that crucial layer of judgment, understanding the nuances, the tone, and the real intent behind posts that AI often misses. That said, even human moderation isn’t foolproof. Platforms will have to set up proper guidelines for human moderators to prevent them from removing or flagging harmless posts.

Otherwise, you can bet that the weird content removals and account bans over innocuous posts will keep on happening. Right now, AI moderation has a lot of growing up to do, and it’s up to big names like Facebook, TikTok, and Instagram to tackle this mess head-on. If they don’t, they risk losing their audience to platforms that get it right.

Featured image AI-generated using Microsoft Designer
