Pavel Durov, CEO of Telegram, finally spoke out after a dramatic twelve-day silence following his arrest in France. In a lengthy post brimming with emojis and defiance, Durov blamed Telegram’s rapid growth for making it “easier for criminals to abuse our platform.”

Durov addressed the criminal charges brought against him, which relate to the distribution of child sexual abuse material on the platform. French authorities blamed Durov for allowing various forms of criminal activity on Telegram, which has built a reputation as a haven for privacy and minimal moderation. The platform has also been accused of harboring extremists and non-consensual deepfake images.

In his statement, Durov described his arrest as “surprising” and “unfair,” saying the charges were based on laws from a “pre-smartphone era” that unfairly held him responsible for third-party activities on Telegram. His core argument: charging CEOs for crimes committed by users would stifle innovation in the tech industry. “No innovator will ever build new tools if they know they can be personally held responsible for potential abuse of those tools,” he said.

A quiet policy shift at Telegram

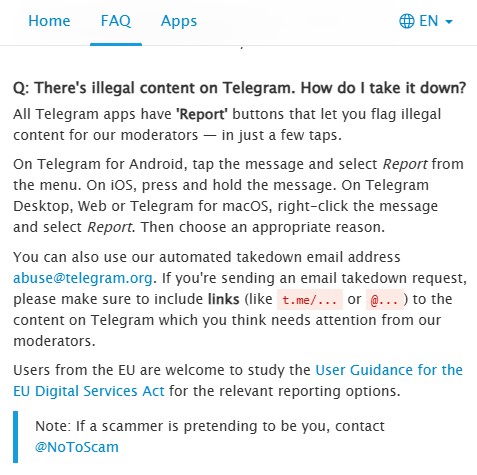

But while Durov was busy defending himself, something else was happening quietly in the background: Telegram updated its moderation policy. For the first time, users can now report private chats to moderators.

Telegram, which boasts nearly 1 billion users, had long stood by its commitment to user privacy, especially when it came to private chats. The FAQ page used to proudly declare, “We do not process any requests related to [private chats].” But that language has been quietly revised. Now, the FAQ informs users that they can use Telegram’s “Report” buttons to flag illegal content for moderators, even in private chats.

Telegram’s new moderation policy also includes an email address where users can send automated takedown requests by simply linking to the offending content. It’s still unclear how these changes will affect the platform’s ability to respond to law enforcement, though Telegram has previously complied with court orders under certain conditions.

This shift marks a significant change in Telegram’s approach to content moderation and privacy, signaling that Durov and his team are feeling the pressure to rein in illegal activity on the platform.

Growing pains and Big Tech’s crime problem

Durov admitted that Telegram’s rapid growth, to 950 million users, has caused “growing pains” that make it easier for criminals to exploit the platform. He said he has made it his “personal goal” to fix this and promised to share more details on moderation improvements soon.

The dilemma of balancing user privacy with content moderation isn’t new. Other tech giants have faced similar battles. Meta, for example, has struggled with policing harmful content across its platforms, from human trafficking to political disinformation. Elon Musk’s X has also been under fire for its inconsistent moderation policies, which critics say allow harmful content to spread unchecked.

Telegram’s new moderation tools and Durov’s promise to tackle the platform’s growing pains could signal a turning point for the company. But whether these changes will be enough to satisfy law enforcement agencies, or calm the waters with French authorities, remains to be seen.

For now, Durov continues to walk the tightrope between upholding user privacy and preventing illegal activity—a balancing act that has put Telegram at the center of global debates about free speech and crime on the internet. And in Durov’s own words, he’s ready to take the platform forward: “We’ve already started that process internally, and I will share more details on our progress with you very soon.”

Here’s the full statement:

❤️ Thanks everyone for your support and love!

Last month I got interviewed by police for 4 days after arriving in Paris. I was told I may be personally responsible for other people’s illegal use of Telegram, because the French authorities didn’t receive responses from Telegram.

This was surprising for several reasons:

1. Telegram has an official representative in the EU that accepts and replies to EU requests. Its email address has been publicly available for anyone in the EU who googles “Telegram EU address for law enforcement”.

2. The French authorities had numerous ways to reach me to request assistance. As a French citizen, I was a frequent guest at the French consulate in Dubai. A while ago, when asked, I personally helped them establish a hotline with Telegram to deal with the threat of terrorism in France.

3. If a country is unhappy with an internet service, the established practice is to start a legal action against the service itself. Using laws from the pre-smartphone era to charge a CEO with crimes committed by third parties on the platform he manages is a misguided approach. Building technology is hard enough as it is. No innovator will ever build new tools if they know they can be personally held responsible for potential abuse of those tools.

Establishing the right balance between privacy and security is not easy. You have to reconcile privacy laws with law enforcement requirements, and local laws with EU laws. You have to take into account technological limitations. As a platform, you want your processes to be consistent globally, while also ensuring they are not abused in countries with weak rule of law. We’ve been committed to engaging with regulators to find the right balance. Yes, we stand by our principles: our experience is shaped by our mission to protect our users in authoritarian regimes. But we’ve always been open to dialogue.

Sometimes we can’t agree with a country’s regulator on the right balance between privacy and security. In those cases, we are ready to leave that country. We’ve done it many times. When Russia demanded we hand over “encryption keys” to enable surveillance, we refused — and Telegram got banned in Russia. When Iran demanded we block channels of peaceful protesters, we refused — and Telegram got banned in Iran. We are prepared to leave markets that aren’t compatible with our principles, because we are not doing this for money. We are driven by the intention to bring good and defend the basic rights of people, particularly in places where these rights are violated.

All of that does not mean Telegram is perfect. Even the fact that authorities could be confused by where to send requests is something that we should improve. But the claims in some media that Telegram is some sort of anarchic paradise are absolutely untrue. We take down millions of harmful posts and channels every day. We publish daily transparency reports (like this or this). We have direct hotlines with NGOs to process urgent moderation requests faster.

However, we hear voices saying that it’s not enough. Telegram’s abrupt increase in user count to 950M caused growing pains that made it easier for criminals to abuse our platform. That’s why I made it my personal goal to ensure we significantly improve things in this regard. We’ve already started that process internally, and I will share more details on our progress with you very soon.

I hope that the events of August will result in making Telegram — and the social networking industry as a whole — safer and stronger. Thanks again for your love and memes 🙏

Featured image: Bloomberg

TechIssuesToday primarily focuses on publishing 'breaking' or 'exclusive' tech news, which means we are usually the first news website on the Internet to highlight the topics we cover daily. So far, our stories have been picked up by many mainstream technology publications, including The Verge, MacRumors, and Forbes.